[Editor’s Note: Mad Scientist Laboratory is pleased to publish our latest “Tenth Man” post. This Devil’s Advocate or contrarian approach serves as a form of alternative analysis and is a check against groupthink and mirror imaging. The Mad Scientist Laboratory offers it as a platform for the contrarians in our network to share their alternative perspectives and analyses regarding the Operational Environment (OE). We continue our series of “Tenth Man” posts examining the foundational assumptions of The Operational Environment and the Changing Character of Future Warfare, challenging them, reviewing the associated implications, and identifying potential signals and/or indicators of change. Enjoy!]

Assumption: The character of warfare will change but the nature of war will remain human-centric.

The character of warfare will change in the future OE as it inexorably has since the advent of flint hand axes; iron blades; stirrups; longbows; gunpowder; breech-loading, rifled, and automatic guns; mechanized armor; precision-guided munitions; and the Internet of Things. Speed, automation, extended ranges, broad and narrow weapons effects, and increasingly integrated multi-domain conduct, in addition to the complexity of the terrain and social structures in which it occurs, will make mid-Twenty-first Century warfare both familiar and utterly alien.

The nature of war, however, is assumed to remain human-centric in the future. While humans will increasingly be removed from processes, cycles, and perhaps even decision-making, nearly all content regarding the future OE assumes that humans will remain central to the rationale for war and its most essential elements of execution. The nature of war has remained relatively constant from Thucydides through Clausewitz, and forward to the present. War is still waged because of fear, honor, and interest, and remains an expression of politics by other means. While machines are becoming ever more prevalent across the battlefield – C5ISR, maneuver, and logistics – we cling to the belief that parties will still go to war over human interests; that war will be decided, executed, and controlled by humans.

Implications: If these assumptions prove false, then the Army’s fundamental understanding of war in the future may be inherently flawed, calling into question established strategies, force structuring, and decision-making models. A changed or changing nature of war brings about a number of implications:

– Humans may not be aware of the outset of war. As algorithmic warfare evolves, might wars be fought unintentionally, with humans not recognizing what has occurred until effects are felt?

– Wars may be fought due to AI-calculated opportunities or threats – economic, political, or even ideological – that are largely imperceptible to human judgement. Imagine that a machine recognizes a strategic opportunity or impetus to engage a nation-state actor that is conventionally (read: humanly) viewed as weak or in a presumed disadvantaged state. The machine launches offensive operations to achieve a favorable outcome or objective that it deemed too advantageous to pass up.

– Infliction of human loss, suffering, and disruption to induce coercion and influence may not be conducive to victory. Victory may be simply a calculated or algorithmic outcome that causes an adversary’s machine to decide their own victory is unattainable.

– The actor (nation-state or otherwise) with the most robust kairosthenic power and/or most talented humans may not achieve victory. Even powers enjoying the greatest materiel advantages could see this once reliable measure of dominion mitigated. Winning may be achieved by the actor with the best algorithms or machines.

These implications in turn raise several questions for the Army:

– How, and how much, should the Army recruit and cultivate human talent if war is no longer human-centric?

– How should forces be structured – what is the “right” mix of humans to machines if war is no longer human-centric?

– Will current ethical considerations in kinetic operations be weighed more or less heavily if humans are further removed from the equation? And what even constitutes kinetic operations in such a future?

– Should the U.S. military divest from platforms and materiel solutions (hardware) and re-focus on becoming algorithmically and digitally-centric (software)?

– What is the role for the armed forces in such a world? Will competition and armed conflict increasingly fall within the sphere of cyber forces in the Departments of the Treasury, State, and other non-DoD organizations?

– Will warfare become the default condition if fewer humans get hurt?

– Could an adversary (human or machine) trick us (or our machines) into miscalculating our response?

Signposts / Indicators of Change:

– Proliferation of AI use in the OE, with increasingly less human involvement in autonomous or semi-autonomous systems’ critical functions and decision-making; the development of human-out-of-the-loop systems.

– Technology advances to the point of near or actual machine sentience, with commensurate machine speed accelerating the potential for escalated competition and armed conflict beyond transparency and human comprehension.

– Nation-state governments approve the use of lethal autonomy, and this capability is democratized to non-state actors.

– Cyber operations have the same political and economic effects as traditional kinetic warfare, reducing or eliminating the need for physical combat.

– Smaller, less-capable states or actors begin achieving surprising or unexpected victories in warfare.

– Kinetic war becomes less lethal as robots replace human tasks.

– Other departments or agencies stand up quasi-military capabilities, have more active military-liaison organizations, or begin actively engaging in competition and conflict.

If you enjoyed this post, please see:

… as well as our previous “Tenth Man” blog posts:

- “The Tenth Man” — War’s Changing Nature in an AI World, by Dr. Peter Layton.

- “The Tenth Man” — Russia’s Era Military Innovation Technopark, by Mr. Ray Finch.

Disclaimer: The views expressed in this blog post do not necessarily reflect those of the Department of Defense, Department of the Army, Army Futures Command (AFC), or Training and Doctrine Command (TRADOC).

Humans are susceptible to cognitive biases and these biases sometimes result in catastrophic outcomes, particularly in the high-stress environment of war-time decision-making. Artificial Intelligence (AI) offers the possibility of reducing the susceptibility to negative outcomes in the commander’s decision-making process by enhancing the collective Emotional Intelligence (EI) of the commander and his/her staff. AI will continue to become more prevalent in combat and as such, should be integrated in a way that advances the EI capacity of our commanders. An interactive AI that feels like one is communicating with a staff officer, which has human-compatible principles, can support decision-making in high-stakes, time-critical situations with ambiguous or incomplete information.

The mission command philosophy necessitates improved EI. EI is defined as the capacity to be aware of, control, and express one’s emotions, and to handle interpersonal relationships judiciously and empathetically, at much quicker speeds in order to seize the initiative in war.

The advent of machine-learning algorithms that could be applied to autonomous lethal weapons systems has so far resulted in a general predilection towards ensuring humans remain in the decision-making loop with respect to all aspects of warfare.

The Battalion is a good example organization to visualize this framework. A machine-learning software system could be connected into different staff systems to analyze data produced by the staff sections as they execute their warfighting functions. This machine-learning software system would also assess the human-in-the-loop decisions against statistical outcomes and aggregate important data to support the commander’s assessments. Over time, this EI-based machine-learning software system could rank the quality of the staff officers’ judgements. The commander can then consider the value of the staff officers’ assessments against the officers’ track-record of reliability and the raw data provided by the staff sections’ systems. The Bridgewater financial firm employs this very type of human decision-making assessment algorithm in order to assess the “believability” of their employees’ judgements before making high-stakes, and sometimes time-critical, international financial decisions.

Stuart Russell offers a construct of limitations that should be coded into AI in order to make it most useful to humanity and prevent conclusions that result in an AI turning on humanity. These three concepts are: 1) principle of altruism towards the human race (and not itself), 2) maximizing uncertainty by making it follow only human objectives, but not explaining what those are, and 3) making it learn by exposing it to everything and all types of humans.

The potential opportunities and pitfalls are abundant for the employment of AI in decision-making. Apart from the obvious danger of this type of system being hacked, the possibility of the AI machine-learning algorithms harboring biased coding inconsistent with the values of the unit employing it is real.

The commander’s primary goal is to achieve the mission. The future includes AI, and commanders will need to trust and integrate AI assessments into their natural decision-making process and make it part of their intuitive calculus. In this way, they will have ready access to objective analyses of their units’ potential biases, enhancing their own EI, and be able to overcome them to accomplish their mission.
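
To make the track-record idea above more concrete, here is a minimal Python sketch of how staff officers’ assessments might be weighted by their past reliability. It is an illustration only, not Bridgewater’s algorithm or any fielded Army system: the `TrackRecord` class, the `weighted_assessment` function, the smoothing rule, and the sample numbers are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class TrackRecord:
    """Hypothetical tally of how often an officer's past judgements matched outcomes."""
    correct: int = 0
    total: int = 0

    def update(self, was_correct: bool) -> None:
        # Record one more judgement and whether it matched the observed outcome.
        self.total += 1
        if was_correct:
            self.correct += 1

    @property
    def reliability(self) -> float:
        # Laplace smoothing (an assumption): an officer with no history scores 0.5.
        return (self.correct + 1) / (self.total + 2)


def weighted_assessment(estimates: Dict[str, float],
                        records: Dict[str, TrackRecord]) -> float:
    """Average the officers' probability estimates, weighted by reliability."""
    total_weight = sum(records[name].reliability for name in estimates)
    weighted_sum = sum(estimates[name] * records[name].reliability
                       for name in estimates)
    return weighted_sum / total_weight


# Illustrative data: two staff sections estimate the chance a course of action succeeds.
records = {
    "S2": TrackRecord(correct=8, total=8),  # strong track record
    "S3": TrackRecord(correct=2, total=8),  # weaker track record
}
estimates = {"S2": 0.9, "S3": 0.3}
combined = weighted_assessment(estimates, records)
print(round(combined, 2))
```

With these made-up numbers the combined estimate leans toward the officer with the stronger record rather than sitting at the simple average of the two. A real system would also need to decide how outcomes are scored and how quickly old judgements should stop counting, which this sketch leaves out.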

• Robotics: Forty-plus countries develop military robots with some level of autonomy. Impact on society, employment.

• Artificial Intelligence: Human-Agent Teaming, where humans and intelligent systems work together to achieve either a physical or mental task. The human and the intelligent system will trade off cognitive and physical loads in a collaborative fashion.

• Swarms/Semi Autonomous: Massed, coordinated, fast, collaborative, small, stand-off. Overwhelm target systems. Mass or disaggregate.

• Internet of Things (IoT): Trillions of internet-linked items create opportunities and vulnerabilities. Explosive growth in low Size, Weight, and Power (SWaP) connected devices (Internet of Battlefield Things), especially for sensor applications (situational awareness). Greater than 100 devices per human. Significant end device processing (sensor analytics, sensor to shooter, supply chain management).

• Space: Over 50 nations operate in space, which is increasingly congested and difficult to monitor, endangering Positioning, Navigation, and Timing (PNT).

• Hyper Velocity Weapons:

• Directed Energy Weapons: Signature not visible without technology, must dwell on target. Power requirements currently problematic.

• Synthetic Biology: Engineering / modification of biological entities.

• Information Environment: Use IoT and sensors to harness the flow of information for situational understanding and decision-making advantage.

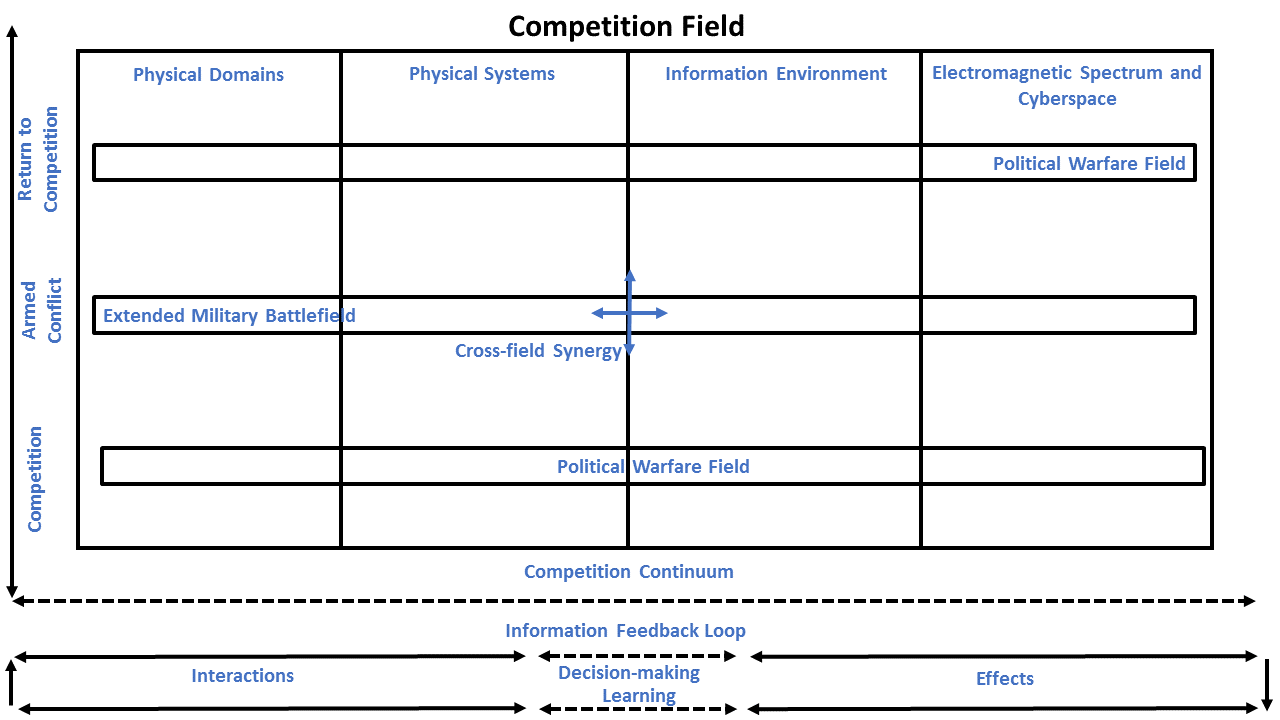

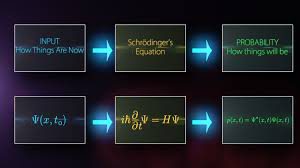

The concept applies quantum field theory to political warfare and the “extended battlefield,” where Joint and multinational systems are the quanta of these fields, prone to excitable states like field quanta. In quantum mechanics, “quanta” refers to the minimum amount of physical entity involved in an interaction, like a photon or bit. The concept also unites the “…

System and particle interactions are uncertain, not the deterministic predictions described in exporting security as preventive war strategy and Newtonian physics. Measures short of war and war itself (i.e., violent or armed competition) are interactions in the competition field based on convergence, acceleration, force, distance, time, and other variables. Systems or things do not enter into relations; relations ground the notion of the system.

These dimensions or fields include the quanta of human beings, Internet of Things (IoT), data, and …

In theories of quantum gravity, that “thing” is the quanta of gravity, hypothetically called a graviton. In this assessment, it is the quanta of competition. The quanta of competition are not in competition; they are themselves competition and are described by links and the relation they express. The quanta of competition are also suited for quantum biology, since they involve both biological and environmental objects and problem sets.