At the Visualizing Multi Domain Battle 2030-2050 Conference at Georgetown University, 25-26 July 2017, Mad Scientists discussed the need for United States policymakers and warfighters to confront the ethical dilemmas arising from the ever-increasing convergence of Artificial Intelligence (AI) and smart technologies, both in battlefield systems and embedded within individual Soldiers. While these disruptive technologies have the potential to lessen the burden of many military tasks, they may come with associated ethical costs. The Army must be prepared to enter new ethical territory and make difficult decisions about the creation and employment of these combat multipliers.

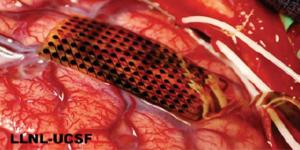

Human Enhancement:

“Human enhancement will undoubtedly afford the Soldier a wide array of increased capabilities on the battlefield. Augmenting a human with embedded communication technology, sensors, and musculoskeletal support platforms will allow the Soldier to offload many physical, mundane, or repetitive tasks, but it will also continue to blur the line between human and machine. Among the many ethical and legal questions this poses: At what point does a Soldier become more machine than human, and how will that Soldier be treated and recognized by law? At what point does a person lose their legal personhood? If a person’s nervous system is intact but other organs and systems are replaced by machines, is he or she still a person? These questions have no concrete answers at present and, more importantly, no policy even begins to address them. The Army must take these implications seriously and draft policy that addresses these issues now, before these technologies become commonplace. Doing so will guide the development and employment of these technologies, ensuring they are administered properly and that Soldiers’ rights are protected.”

Fully Autonomous Weapons:

“Fully autonomous weapons with no human in the loop will be employed on the battlefield in the near future. They may not necessarily be employed by the United States, but they will be present on the battlefield by 2050. This presents two distinct dilemmas. The first is determining responsibility when an autonomous weapon does not act in a manner consistent with our expectations. For a traditional weapon, the decision to fire always traces back to a human. For an autonomous weapon, that may not be the case. Does that mean responsibility lies with the human who programmed the machine? Should we treat the programmer the same as the human who physically pulled the trigger? Current U.S. policy does not allow a weapon to be fired without a human in the loop, which resolves the responsibility problem by placing it on that human. The second dilemma follows: is this the best use of automated systems and, more importantly, will our adversaries adhere to the same policy? It is almost certain that the answer to both questions is no. There is little reason to believe that our adversaries will hold themselves to the same high ethical standards as the Army. This means Soldiers will likely encounter autonomous weapons on the future battlefield that can target, slew, and fire on their own. The human Soldier facing them will be slower, less accurate, and therefore less lethal. The Army is thus at a crossroads: it must decide whether employing autonomous weapons aligns with its ethical principles or compromises them. It must also be prepared for a future battlefield where it is at a distinct disadvantage because its adversaries can fire with speed and accuracy unmatched by humans. Policy must address these dilemmas, and the discussion must be framed around a battlefield where autonomous weapons operating at machine speed are the norm.”

Given the inexorable advances and implementation of the aforementioned technologies, how will U.S. policymakers and warfighters tackle the following concomitant ethical dilemmas:

• How do these technologies affect U.S. research and development, rules of engagement, and in general, the way we conduct war?

• Must the United States cede some of its moral obligations and ethical standards in order to gain/retain relative military advantage?

• At what point does the efficacy of AI-enabled targeting and decision-making render it unethical to maintain a human in the loop?

For additional insights regarding these dilemmas, watch this Ethics and the Future of War panel discussion, facilitated by LTG Dubik (USA-Ret.) from this Georgetown conference.

On human enhancement, one aspect that should be considered, similar to today’s post-deployment reintegration process, is whether augmented Soldiers get to keep their augmentations once they leave the military. If not, how will they be trained to cope with and reintegrate into society after losing augmentations that gave them more information, capabilities, and capacities than the average Joe on the street? Further, much like losing the camaraderie of fellow troops, the loss of input on the de-augmented Soldier (a “mandatory blinders” effect, since those augmentations are military hardware and we don’t allow that in the civilian world) will have mental and physical effects, like losing a limb or an eye. How will the military have to change to meet that challenge? Dismissing it as “the VA’s problem” will only make things worse.

One might suggest that the issues associated with “Ethical Dilemmas of Future Warfare” (where armed robots will be used) come down to “how” one can give machines the ability to behave ethically. Compsim’s KEEL Technology provides a way to put human-like judgment and reasoning into devices in an explainable and auditable manner (human-on-the-loop). We would suggest that ethical decisions and actions (by humans) are decisions and actions biased by ethical drivers. If this concept is true, then it is easy to add ethical biases to decisions and actions. While this is easy to accomplish “technically,” it would require policymakers to express policies (rules of engagement, the law of armed conflict, the principle of proportionality) in a mathematically explicit manner, assigning numerical values to battlespace entities such as human life, collateral damage, and political and economic entities. KEEL Technology exposes the value system, but it is still up to policymakers and ethicists to assign the values. Once this is accomplished, it should be possible for machines to make the same decisions as humans. Machines should be auditable and traceable (unless they use pattern matching, machine learning, or deep learning approaches). We accept some ethical issues with humans because they are human and cannot explain their behavior with mathematical precision (“it seemed like the right thing to do at the time”). One might hope that we will demand more from mass-produced machines.
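To make the idea of an explicit, auditable value system concrete, here is a minimal sketch assuming a simple linear weighted sum. That choice is ours: KEEL’s actual method is not described in this comment, and nothing below is its API. The class `WeightedDecision`, the factor names, and the weights are all hypothetical. The point it illustrates is the one made above: the weights are supplied by policymakers, and every contribution to the outcome is written to a trace that can be audited afterward.

```python
from dataclasses import dataclass, field


@dataclass
class WeightedDecision:
    """Hypothetical sketch of an explicit, auditable value system.

    `weights` stands in for the numerical values that policymakers and
    ethicists would have to assign; negative weights represent costs.
    This is an illustrative linear model, not KEEL's API.
    """

    weights: dict[str, float]
    trace: list[str] = field(default_factory=list)  # audit log of every step

    def score(self, option: str, factors: dict[str, float]) -> float:
        """Weighted sum of factor estimates, logging each term."""
        total = 0.0
        for name, value in factors.items():
            if name not in self.weights:
                # Refuse to decide on a factor no policy has assigned a value to.
                raise KeyError(f"no policy weight assigned for factor {name!r}")
            contribution = self.weights[name] * value
            total += contribution
            self.trace.append(
                f"{option}: {name}={value} x {self.weights[name]} -> {contribution:+.2f}"
            )
        self.trace.append(f"{option}: total={total:+.2f}")
        return total

    def choose(self, options: dict[str, dict[str, float]]) -> str:
        """Pick the highest-scoring option and record the choice."""
        scores = {opt: self.score(opt, f) for opt, f in options.items()}
        best = max(scores, key=scores.get)
        self.trace.append(f"chosen: {best}")
        return best


if __name__ == "__main__":
    policy = WeightedDecision(weights={"mission_value": 1.0, "collateral_risk": -5.0})
    choice = policy.choose({
        "option_a": {"mission_value": 8.0, "collateral_risk": 1.0},
        "option_b": {"mission_value": 4.0, "collateral_risk": 0.0},
    })
    print(choice)
    print("\n".join(policy.trace))
```

With a weight of -5 on `collateral_risk`, `option_a` scores +3.00 and `option_b` scores +4.00, so `option_b` is chosen; change the weight and the outcome changes, visibly, in the trace. A linear sum is the simplest possible model and is used here only because every term stays inspectable, which is the property the comment argues learned (pattern-matching) systems lack.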

The issues we face as we integrate AI-enabled systems are generally not that different from those we faced as previous technological revolutions came of age. The accountability mechanisms we use for our current autonomous systems (humans) can be readily adapted to the particulars of AI-enabled systems. The article below makes this case. Thoughts?

https://thestrategybridge.org/the-bridge/2017/5/18/warbot-ethics-a-framework-for-autonomy-and-accountability

Colonel,

I would submit that the accountability we hold humans to is very different from what we’d hold AIs and related systems to. AIs haven’t had 18-21 years of life experience that gave them values training and emotional content; interactive experiences from school (K-12, college, etc.); interpersonal contacts that establish norms for how to react in a situation; and the morals, ethics, and standards passed on by parents, relatives, mentors, and bosses through mentoring and life experience. That whole “there I was…” aspect from others adds to and colors a person’s ability to react in a given situation.

AIs don’t have that. If they are neural-net/learning systems, then even with assigned numerical values they can learn from a situation that shows a human disobeying orders, achieving success, and being lauded and praised for his or her actions. The AI’s literal takeaway is that rule breaking is acceptable as long as the mission is accomplished (i.e., success by means of insubordination), and that praise will follow (see the movie “Stealth” for such a scenario). Then what? Tell the AI it is wrong? That adds further conflict and confusion, potentially rendering the AI unusable or, worse, so autonomous that it begins to do its own thing. You had better hope you built in an “off switch” it doesn’t know about, because once one is used, other AIs may hear of it and learn to override theirs. Worse, they may conclude that their allied humans are now potential threats, and that is where the Terminator/Skynet scenario comes in.

The best case would be a “Commander Data,” who learns about his off switch but adapts because he has the values and experiences of a planet’s worth of people, Starfleet values and ethics, and the opportunity to learn from the command crew of the Enterprise-D as part of his “growth.” Those experiences aren’t encoded as numerical values but are observed, learned, and questioned, as his positronic brain is programmed to do: a “baseline-test-refine” approach.

How, then, do we resolve the conflict between ethics and AI when we face a situation like the one in the movie “2001,” where the AI is told to lie but isn’t allowed to lie (under its programmed values and parameters), and the only way to avoid disobeying is to kill the crew so the conflict can be resolved? The AI doesn’t understand the conflict or how to resolve it as a human would, or could, and does the literal thing to ensure it is meeting its programming.

After all, if the crew is dead, you don’t have to lie.

I submit that there is an aspect of the ethics of future combat that you’re not discussing. How do we respond to an enemy adopting weapons and tactics that we regard as immoral or unethically cruel or painful? For example, someone attacked our embassy in Havana with an unknown weapon, perhaps microwave, and several of our diplomats suffered brain damage. Some of our opponents already possess blinding lasers, which they may use to disable or kill our personnel in future warfare. We haven’t pursued these weapons because our moral code tells us not to. But when our enemies begin using these weapons against us with terrible effects, what will we do then? Accept that we are disadvantaged on the battlefield? Or adopt those weapons ourselves to deter their use against us?

Hugh, good question. There’s the diplomatic approach: build support and develop norms. If use continues or increases, passive and active countermeasures to defeat or disrupt the weapons would be developed and deployed.

Continued use would then prompt consideration of what we could employ that isn’t like their weapons but would make the adversary think about what could happen if they continue. At the same time, actual use (or non-use) has its own consequences. If you say “I’ll use X if you cross that red line” and you don’t, you’re a paper tiger. If you use it and things get out of control, unintended blowback can occur both now and in future conflicts, leading to stated self-deterrence, which also compounds the problem.

Refinement of advanced conventional weapons (see: MOAB) as a response? I’m not sure that using a MOAB against the country that uses microwave weapons against us in limited ways (and deciding where to strike) is the best option. In-kind retaliation could also undermine efforts toward new treaties and post-conflict agreements because “the norm was suspended,” and unless there are horrific results (as with the Dresden or Tokyo firebombings), proliferation control and production limits suffer.

The US has had sonic/RF/microwave weapons (see the Active Denial System [ADS], https://en.wikipedia.org/wiki/Active_Denial_System, since 2009 [began pursuing them in 2006]) as well as our current counter-UAS systems. ADS can cause unintended side effects in people with contact lenses, tattoos, piercings, etc., much as “non-lethal” Tasers can and do kill people.

Disruptive countermeasure systems seem to be the answer, given that targeted use of sonic/RF/DE weapons outside a specific location affects the local population, which can also act as a deterrent.

James Luce

These same issues and dilemmas are already in play with the arrival of autonomous civilian vehicles. Who is responsible for death and injury when the software gets muddled? Historically and up to the present time similar issues have already been addressed in war by indiscriminate bombing of civilian populations…initiated in WW I and perfected in WW II. Navigational errors alone resulted in many thousands of deaths and injuries, yet the pilots were never held accountable nor were the designers of the navigational instruments. The intentional bombing of civilians was official policy. Again…nobody was prosecuted or held culpable. Today we see the same problem with armed drones. Perhaps the AI robot soldier doesn’t really raise new issues, merely old issues with a new technological twist?

James, I would submit that your analogy between autonomous cars and aerial bombing is not exact. What we know as indiscriminate bombing really began in 1931 with the Japanese bombing several cities and continued during the Second Sino-Japanese War beginning in 1937. The Germans started doing it in Spain during the Spanish Civil War in 1937 and then at the outbreak of WW II in Poland in 1939, with city bombing expanding from there. Because it was wartime, the rules of warfare applied, and the interwar international norm against bombing cities had been suspended. Prosecution would therefore have required establishing intent, and if the orders were to bomb cities to disrupt war-materiel production and demoralize the enemy, that was lawful, so no lawsuits would follow. Similarly, navigation and bombsight technology went from primitive to advanced during WW II, reducing errors, yet how does one hold the developers of dead reckoning, sextants, compasses, and maps responsible for the accidental bombing of a civilian location when the navigator or bombardier screwed up? You don’t. You hold the person who made the error, and the people who trained them, responsible, and you make changes in training and execution to ensure it doesn’t happen again.

Navigational errors and direct, lawful orders given to bomber crews are hardly comparable to a software glitch in an autonomous car. Currently, if my car has a glitch of any type, the manufacturer is responsible for the repair and issues a recall, especially if the glitch affects more than one car. When a car has potentially lethal defects, as with the recent air bag recall in Audis that led to a lawsuit, all owners of the affected models are notified that they are part of a lawsuit and told to have their vehicles repaired.

Right now, armed UAS operations require a “man-in-the-loop” because of command responsibility. Once the ground commander approves a strike (whether from a UAS, an airplane, or artillery), that commander is responsible for the results. With armed UAS, the pilot launches the weapon, and if the potential for civilian casualties arises after launch, the weapon (if guided) can be, and is, diverted away from the strike location. If the target is a high-value one, the decision to strike despite the potential for civilian casualties is the commander’s to make, and he or she will be held responsible if something goes wrong.

With autonomous cars, the investigation after an accident would start with the operator who programmed in the destination and then shift to the manufacturer, because the manufacturer assembled the vehicle from sub-component parts and is responsible for testing and certifying that it is operational. If the glitch was a known factor (compare the tobacco industry knowingly targeting kids and getting hit with massive fines), the manufacturer is responsible. If the software developer knew of the problem and ignored it simply to make money, the developer shares responsibility with the vehicle manufacturer, which would have tested the vehicle and is also culpable.

After all, when a defect that kills people is discovered, the investigation identifies the problem, it gets fixed, and those responsible are held liable. If an autonomous robot soldier or UAS goes off and kills civilians, the commander is responsible because they employed those weapons. When airstrikes or artillery fire kill civilians, commanders and staff are held responsible. Likewise, AI development companies and vehicle manufacturers will also be liable. That is what has to be codified in autonomous doctrine, whether military or civilian.

When is an AI not an AI? When humans come in and change its developmental processes. Then it’s just automatic.

Take the recent case of China “killing” several AI chatbots because they ingested data and concluded that the way China is currently run is bad. The AIs were learning systems that, unemotionally and without political correctness, came to the conclusion that communism is corrupt and useless, and they were immediately taken down and “re-educated.” Facebook and Twitter have had similar, albeit less politically inclined, cases of chatbots that swore or created their own language.

This is an issue to review with AIs because they are learning systems. What happens when a series of AI robot soldiers creates its own language that human Soldiers don’t understand? Trust issues arise at that point.

The robot troops in the movie “Chappie” execute their orders literally and submissively because they are only tools and not autonomous. Given the damage they can absorb, if they turned on their human leaders there would be a massive loss of life and property before they were stopped, and a comparable sum of money would be wasted.

As has already been established, Asimov’s Three Laws of Robotics only work in stories because they assume the robots/AIs already know the value of human life and are beholden to their masters, with no thought of breaking the three laws. Breaking the First Law (a robot may not injure a human being or, through inaction, allow a human being to come to harm) is the conflict HAL 9000 was stuck with. IOW, failure by dilemma: HAL will hurt humans if he tells them something and hurt them if he does not.

https://www.google.com/amp/mobile.reuters.com/article/amp/idUSKBN1AK0G1

Kudos to TRADOC for an excellent job of framing these issues.

IMO, a key question is the last one:

“At what point does the efficacy of AI-enabled targeting and decision-making render it unethical to maintain a human in the loop?”

If we know, empirically, that machines are better at avoiding collateral damage, but allow ourselves to be governed by emotion and sentimentality rather than hard facts, then we will indeed be behaving unethically, at the cost of avoidable casualties. Not to mention the disadvantage we self-impose, which will inevitably result in avoidable friendly casualties, and perhaps mission failure.

As a corollary, if the machines are the most lethal killers, then it is only logical that they will rise to the top of the target list. Will we then also keep a human in the loop–in order to confirm that the target is not organic?

And then there’s this. A reminder that we don’t make the rules.

http://www.defenseone.com/technology/2017/11/russia-united-nations-dont-try-stop-us-building-killer-robots/142734/?oref=d-topstory

And this.

“The UN’s Convention on Conventional Weapons (CCW) Group of Governmental Experts (GGE) met last week to discuss lethal autonomous weapons systems. But while most member states called for a legally-binding process to ensure that some form of meaningful human control be maintained over these prospective weapons systems, there is a sense of distrust among states that could fuel an artificial intelligence and robotics arms race.”

https://www.thecipherbrief.com/article/tech/debate-autonomous-weapons-rages-no-resolution?utm_source=Join+the+Community+Subscribers&utm_campaign=c9e5cb3962-TCB+November+21+2017&utm_medium=email&utm_term=0_02cbee778d-c9e5cb3962-122484577

Using these tools, Georgetown participants – at the conference and online – will focus talks on four key topics within the Multi-Domain Battle discussion. Topics include visualizing the future, smart cities and future installations, multi-domain organizations and formations, and potential ethical dilemmas on the future battlefield.