The nature of war remains inherently humanistic and largely unchanging. That said, Mad Scientists must understand the changing character of warfare in the future Operational Environment, as discussed on pages 16-18 of The Operational Environment and the Changing Character of Future Warfare. As emerging technologies become so significant, extensive, and pervasive, warfare will be transformed – made faster, more destructive, and fought at longer ranges; targeting civilians and military equally across the physical, cognitive, and moral dimensions; and (if waged effectively) securing its objectives before actual battle is joined. Although the character of warfare changes dramatically, there are a number of timeless competitions that will endure for the foreseeable future.

Finders vs Hiders. As in preceding decades, that which can be found, if unprotected, can still be hit. By 2050, it will prove increasingly difficult to stay hidden. Most competitors will be able to access space-based surveillance, networked multi-static radars, a wide variety of drones and drone swarms, and a vast array of passive and active sensors that are far cheaper to produce than the countermeasures required to defeat them. Quantum computing and advanced sensing will open new levels of situational awareness. Passive sensing, especially when combined with artificial intelligence and big-data techniques, may routinely outperform active sensors.

Hiding will still be possible, but will require a dramatic reduction of thermal, electromagnetic, and optical signatures. More successful methods may involve “hiding” amongst an obscuration of emitters and signals – presenting adversaries with a veritable needle within a stack of like-appearing and emitting needles.

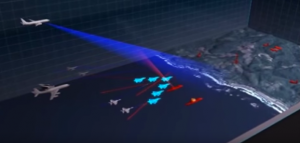

Strikers vs Shielders. Precision strike will improve exponentially through 2050, with the type of precision once reserved for high-end aerospace assets now extended to all domains and at every echelon of engagement. Combatants, both state and non-state, will have a host of advanced delivery options available to them, including advanced kinetic weapons, hypersonics, directed energy (including laser and microwave), and cyber. Space-based assets will become increasingly integrated into striker-shielder complexes, with sensors, anti-satellite weapons, and possibly space-to-earth strike platforms.

At the same time, and on the other end of the spectrum, it will be possible to deploy swarms of massed, low-cost, self-organizing unmanned systems (directed by bio-mimetic algorithms) to overwhelm opponents, offering an alternative to expensive, exquisite systems. With operational range spanning from the strategic – including the homeland – to the tactical, the application of advanced fires from one domain to another will become routine. A wide range of effects can be delivered by a striker, ranging from point precision to area suppression using thermobarics, brilliant cluster munitions, and even a variety of nuclear, chemical, or biological systems. Shielders, on the other hand, will focus on an integrated approach to defense, targeting enemy finders, their linkages to strikers, or the strikers themselves.

Protection vs Access. While protection vs. access is generally thought about in physical terms, there is a more prevalent competition emerging in the future regarding cyber protection and access to data. Data is increasingly important, as it underpins AI, machine learning, decision-making, and battlefield management. Due to its vital but often sensitive nature, there is a tension point between the need to access friendly and adversarial information and the need for both sides to protect it.

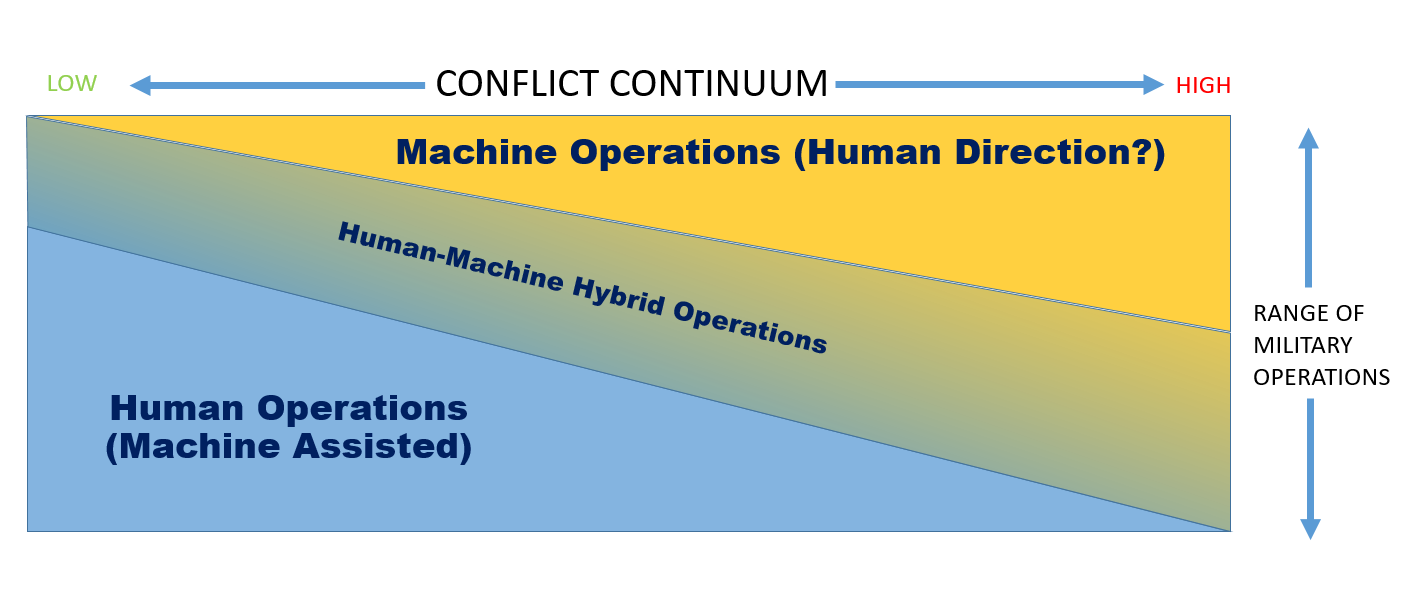

Planning and Judgement vs Reaction and Autonomy. The mid-Century duel for the initiative has a unique character. New operational tools offer extraordinary speed and reach and often precipitate unintended consequences. Commanders will need to open multi-domain windows through which to deliver effects, balancing deliberate planning to set conditions with “l’audace” — the ability to rapidly exploit opportunities and strike at vulnerabilities as they appear — thereby achieving success against sophisticated defensive deployments and shielder complexes.

This will place an absolute imperative on ISR, as well as on intelligence analysis that is augmented by AI, big data, and advanced analytic techniques to determine the conditions on the battlefield, and specifically when, and for how long, a window of operation is open. On the defensive, a commander will be faced with increasingly short decision cycles, with automation and artificial intelligence-assisted decisions becoming the norm. Man-machine teaming will be essential to staff planning, with carefully trained, educated, and possibly cognitive performance-enhanced personnel working to create and exploit opportunities. This means that Armies no longer merely adapt between wars, but do so between and during short-term engagements.

Escalation vs De-Escalation. The competition between violence escalation and de-escalation will be central to stability, deterrence, and strategic success. Violence is readily available on unprecedented scales to a wide range of actors. Conventional and cyber capabilities can be so potent as to generate effects on the scale of WMD. State and non-state actors alike will utilize hybrid strategies and “Gray Zone” operations, demonstrating a willingness to escalate conflict to a level of violence that exceeds the interests of an adversary to intervene. Long-range strikers and shielder complexes, which extend from the terrestrial domains into space – taken together with cyber technology and more ubiquitous finders – are significantly destabilizing and allow a combatant a freedom of maneuver to achieve objectives short of open war. The ability to effectively escalate and de-escalate along a scalable series of options will be a prominent feature of force design, doctrine, and policy by mid-Century.

These timeless competitions prompt the following questions:

1) What kind of R&D implications might each of these competitions have? Does R&D become increasingly ceded to the private sector as technological advances become increasingly agnostic to defensive or offensive purposes?

2) In what ways do technological shifts in society impact these timeless competitions? (e.g., does the emergence of the Internet of Things – and eventually Internet of Everything – re-characterize Finders vs. Hiders?)

3) Does the democratization of technology and information increase the role of the Army in land warfare or does the pervasive nature of these technologies and cyber force the Army to incorporate itself more in a whole-of-government approach?

4) What kind of changes do the evolutions of timeless competitions bring about to Army force structuring, organization, strategy, tactics, training, and recruiting?

For further discussions regarding these Timeless Competitions, please see pages 43-49 of the Robotics, Artificial Intelligence & Autonomy Conference Final Report, and An Advanced Engagement Battlespace: Tactical, Operational and Strategic Implications for the Future Operational Environment.

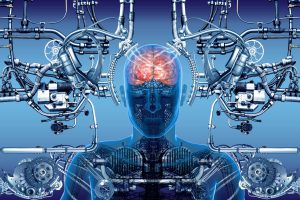

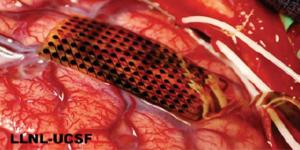

“Human enhancement will undoubtedly afford the Soldier a litany of increased capabilities on the battlefield. Augmenting a human with embedded communication technology, sensors, and muscular-skeletal support platforms will allow the Soldier to offload many physical, mundane, or repetitive tasks but will also continue to blur the line between human and machine. Some of the many ethical/legal questions this poses, are at what point does a Soldier become more machine than human, and how will that Soldier be treated and recognized by law? At what point does a person lose their legal personhood? If a person’s nervous system is intact, but other organs and systems are replaced by machines, is he/she still a person? These questions do not have concrete answers presently, but, more importantly, they do not have policy that even begins to address them. The Army must take these implications seriously and draft policy that addresses these issues now before these technologies become commonplace. Doing so will guide the development and employment of these technologies to ensure they are administered properly and protect Soldiers’ rights.”

“Fully autonomous weapons with no human in the loop will be employed on the battlefield in the near future. Their employment may not necessarily be by the United States, but they will be present on the battlefield by 2050. This presents two distinct dilemmas regarding this technology. The first dilemma is determining responsibility when an autonomous weapon does not act in a manner consistent with our expectations. For a traditional weapon, the decision to fire always comes back to a human counterpart. For an autonomous weapon, that may not be the case. Does that mean that the responsibility lies with the human who programmed the machine? Should we treat the programmer the same as we treat the human who physically pulled the trigger? Current U.S. policy doesn’t allow for a weapon to be fired without a human in the loop. As such, this alleviates the responsibility problem and places it on the human. However, is this the best use of automated systems and, more importantly, will our adversaries adhere to this same policy? It’s almost assured that the answer to both questions is no. There is little reason to believe that our adversaries will employ the same high level of ethics as the Army. This means Soldiers will likely encounter autonomous weapons that can target, slew, and fire on their own on the future battlefield. The human Soldier facing them will be slower, less accurate, and therefore less lethal. So the Army is at a crossroads where it must decide if employing automated weapons aligns with its ethical principles or if they will be compromised by doing so. It must also be prepared to deal with a future battlefield where it is at a distinct disadvantage as its adversaries can fire with speed and accuracy unmatched by humans. Policy must address these dilemmas and discussion must be framed in a battlefield where autonomous weapons operating at machine speed are the norm.”

Conflict in the Mid-21st Century will witness the proliferation of unmanned, robotic, semi-autonomous, and autonomous weapons, platforms, and combatants that will dramatically change the role of Soldiers on the battlefield. At the