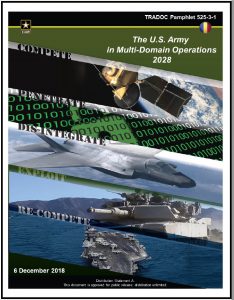

[Editor’s Note: The U.S. Army’s capstone unclassified document on the Operational Environment (OE) states:

“Russia can be considered our ‘pacing threat,’ and will be our most capable potential foe for at least the first half of the Era of Accelerated Human Progress [now through 2035]. It will remain a key strategic competitor through the Era of Contested Equality [2035 through 2050].” TRADOC Pamphlet (TP) 525-92, The Operational Environment and the Changing Character of Warfare, p. 12.

In today’s companion piece to the previously published China: Our Emergent Pacing Threat, the Mad Scientist Laboratory reviews what we’ve learned about Russia, our current pacing threat, in an anthology of insights gleaned from previous posts. This is a far more sophisticated strategic competitor than your Dad’s (or Mom’s!) Soviet Union. Enjoy!]

The dichotomy of war and peace is no longer a useful construct for thinking about national security or the development of land force capabilities. There are no longer defined transitions from peace to war or from competition to conflict. This state of simultaneous competition and conflict is continuous and dynamic, but not necessarily cyclical. Russia will seek to achieve its national interests short of conflict, using a range of actions, from cyber operations to kinetic strikes against unmanned systems, that walk up to the line of a short or protracted armed conflict.

1. Hemispheric Competition and Conflict: Over the last twenty years, Russia has been viewed as a regional competitor in Eurasia, seeking to undermine and fracture traditional Western institutions, democracies, and alliances. It is now transitioning into a hemispheric threat with a primary focus on challenging the U.S. Army all the way from our home station installations (i.e., the Strategic Support Area) to the Close Area fight. We can expect cyber attacks against critical infrastructure, the use of advanced information warfare such as deepfakes targeting units and families, and the possibility of small-scale kinetic attacks during what were once uncontested administrative actions of deployment. There is no institutional memory for this type of threat, and simply adding time and required speed to deployment is not enough to exercise Multi-Domain Operations.

See: Blurring Lines Between Competition and Conflict

2. Cyber Operations: Russia has already employed tactics designed to exploit vulnerabilities arising from Soldier connectivity. In the ongoing Ukrainian conflict, for example, Russian cyber operations coordinated attacks against Ukrainian artillery, in just one example of a “really effective integration of all these [cyber] capabilities with kinetic measures.” By sending spoofed text messages to Ukrainian soldiers informing them that their support battalion has retreated, that their bank account has been exhausted, or that they are simply surrounded and have been abandoned, the Russians trigger personal communications that enable them to fix and target Ukrainian positions. Taking it one step further, they have even sent false messages to soldiers’ families informing them that their loved one was killed in action. This sets off a chain of events: the family member immediately calls or texts the soldier, and another spoofed message follows to the original phone. With a high number of messages sent to enough targets, an artillery strike is called in on the area where an excess of cellphone usage has been detected. In plain English, Russia has successfully combined traditional weapons of land warfare (such as artillery) with the new potential of cyber warfare.

See: Nowhere to Hide: Information Exploitation and Sanitization and Hal Wilson’s Britain, Budgets, and the Future of Warfare.

3. Influence Operations: Russia seeks to shape public opinion and influence decisions through targeted information operations (IO) campaigns, often relying on weaponized social media. Russia recognizes the importance of AI, particularly as a means to match and overtake the superior military capabilities that the United States and its allies have held for the past several decades. Highlighting this importance, Russian President Vladimir Putin stated in 2017 that “whoever becomes the leader in this sphere will become the ruler of the world.” AI-guided IO tools can empathize with an audience to say anything, in any way needed, to change the perceptions that drive the use of physical weapons. Future IO systems will be able to individually monitor and affect tens of thousands of people at once.

Russian bot armies continue to make headlines in executing IO. The New York Times maintains about a dozen Twitter feeds and produces around 300 tweets a day, but Russia’s Internet Research Agency (IRA) regularly puts out 25,000 tweets in the same twenty-four hours. The IRA’s bots are really just low-tech curators; they collect, interpret, and display desired information to promote the Kremlin’s narratives.

Next-generation bot armies will employ far faster computing techniques and profit from an order-of-magnitude increase in network speed once 5G services are fielded. If “Repetition is a key tenet of IO execution,” then this machine gun-like ability to fire information at an audience, with empathetic precision and custom content, will provide the means to change a decisive audience’s very reality. No breakthrough science is needed and no bureaucratic project office is required. These pieces are already there, waiting for an adversary to put them together.

One future vignette posits Russia’s GRU (Military Intelligence) employing AI Generative Adversarial Networks (GANs) to create fake persona injects that mimic select U.S. Active Army, ARNG, and USAR commanders making disparaging statements about their confidence in our allies’ forces, the legitimacy of the mission, and their faith in our political leadership. Sowing these injects across unit social media accounts, Russian Information Warfare specialists could seed doubt and erode trust in the chain of command amongst a percentage of susceptible Soldiers, creating further friction.

See: Weaponized Information: One Possible Vignette, Own the Night, The Death of Authenticity: New Era Information Warfare, and MAJ Chris Telley’s Influence at Machine Speed: The Coming of AI-Powered Propaganda

4. Isolation: Russia seeks to cocoon itself from retaliatory IO and Cyber Operations. At the October 2017 meeting of the Security Council, “the FSB [Federal Security Service] asked the government to develop an independent ‘Internet’ infrastructure for BRICS nations [Brazil, Russia, India, China, South Africa], which would continue to work in the event the global Internet malfunctions.” Security Council members argued the Internet’s threat to national security is due to:

“… the increased capabilities of Western nations to conduct offensive operations in the informational space as well as the increased readiness to exercise these capabilities.”

Having its own root servers would make Russia independent of monitors like the Internet Corporation for Assigned Names and Numbers (ICANN) and protect the country in the event of “outages or deliberate interference.” “Putin sees [the] Internet as [a] CIA tool.”

See: Dr. Mica Hall’s The Cryptoruble as a Stepping Stone to Digital Sovereignty and Howard R. Simkin’s Splinternets

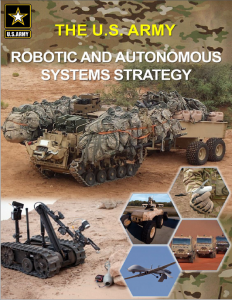

5. Battlefield Automation: Given the rapid proliferation of unmanned and autonomous technology, we are already in the midst of a new arms race. Russia’s Syria experience, along with its monitoring of the U.S. use of unmanned systems for the past two decades, convinced the Ministry of Defense (MOD) that its forces need expanded unmanned combat capabilities to augment existing Intelligence, Surveillance, and Reconnaissance (ISR) Unmanned Aerial Vehicle (UAV) systems that allow Russian forces to observe the battlefield in real time.

The next decade will see Russia complete the testing and evaluation of an entire lineup of combat drones that were in different stages of development over the previous decade. They include the heavy Ohotnik combat UAV (UCAV); the mid-range Orion, which was tested in Syria; the Russian-made Forpost, a UAV that was originally assembled under Israeli license; the mid-range Korsar; and the long-range Altius, which was billed as Russia’s equivalent to the American Global Hawk drone. All of these UAVs are several years away from potential acquisition by the armed forces, with some going through factory tests and others graduating to military testing and evaluation. Depending on the model, these UAVs will have ranges from over a hundred to possibly thousands of kilometers and will be able to carry weapons for a diverse set of missions.

Russian ground forces have also been testing a full lineup of Unmanned Ground Vehicles (UGVs), from small to tank-sized vehicles armed with machine guns, cannon, grenade launchers, and sensors. The MOD is conceptualizing how such UGVs could be used in a range of combat scenarios, including urban combat. However, in a candid admission, Andrei P. Anisimov, Senior Research Officer at the 3rd Central Research Institute of the Ministry of Defense, reported on the Uran-9’s critical combat deficiencies during the 10th All-Russian Scientific Conference entitled “Actual Problems of Defense and Security,” held in April 2018. The Uran-9 is a test-bed system, and much has to happen before it can be successfully integrated into the current Russian concept of operations. What is key is that it has been tested in a combat environment, and the Russian military and defense establishment are incorporating lessons learned into next-generation systems. We can expect more eye-opening lessons learned from the potential combat deployment of the Uran-9 and other UGVs.

Another significant trend is the gradual shift from manual control over unmanned systems to a fully autonomous mode, perhaps powered by a limited Artificial Intelligence (AI) program. The Russian MOD has already communicated its desire to have unmanned military systems operate autonomously in a fast-paced and fast-changing combat environment. While the actual technical solution for this autonomy may evade Russian designers in this decade due to its complexity, the MOD will nonetheless push its developers for near-term results that may grant such fighting vehicles limited semi-autonomous status. The MOD would also like this AI capability to be able to direct swarms of air, land, and sea-based unmanned and autonomous systems.

The Russians have been public with both their statements about new technology being tested and evaluated, and with possible use of such weapons in current and future conflicts. There should be no strategic or tactical surprise when military robotics are finally encountered in future combat.

See proclaimed Mad Scientist Sam Bendett’s Major Trends in Russian Military Unmanned Systems Development for the Next Decade, Autonomous Robotic Systems in the Russian Ground Forces, and Russian Ground Battlefield Robots: A Candid Evaluation and Ways Forward.

6. Innovation: Russia has developed a military innovation center, the Era Military Innovation Technopark, near the city of Anapa (Krasnodar Region) on the northern coast of the Black Sea. Touted as “A Militarized Silicon Valley in Russia,” the facility will be co-located with representatives of Russia’s top arms manufacturers, which will “facilitate the growth of the efficiency of interaction among educational, industrial, and research organizations.” By bringing together the best and brightest in the field of “breakthrough technology,” the Russian leadership hopes to see “development in such fields as nanotechnology and biotech, information and telecommunications technology, and data protection.”

That said, while Russian scientists have often been at the forefront of technological innovation, the country’s stifling bureaucracy and broken legal system prevent these discoveries from ever bearing fruit, because Russian scientists and innovators cannot profit from their discoveries. The jury is still out as to whether Russia’s Era Military Innovation Technopark can deliver real innovation.

See: Ray Finch‘s “The Tenth Man” — Russia’s Era Military Innovation Technopark

Russia’s embrace of these and other disruptive technologies, together with its adoption of hybrid strategies that challenge traditional symmetric advantages and conventional ways of war, increases its ability to challenge U.S. forces across multiple domains. As an authoritarian regime, Russia can ensure unity of effort and a whole-of-government focus more easily than the Western democracies can. It will continue to seek out and exploit fractures and gaps in the decision-making, governance, and policy of the U.S. and its allies.

If you enjoyed this post, check out these other Mad Scientist Laboratory anthologies:

- The Information Environment: Competition and Conflict anthology, a collection of previously published blog posts that serves as a primer on this topic and examines the convergence of technologies that facilitates information weaponization.

- FY18 Mad Scientist Laboratory Anthology, which serves up “the best of” the futures-oriented assessments published during the MadSciBlog’s first year.
