[Editor’s Note: Mad Scientist Laboratory is pleased to present the first of two guest blog posts by Dr. Nir Buras. In today’s post, he makes the compelling case for the establishment of man-machine rules. Given the vast technological leaps … Read the rest

105. Emerging Technologies as Threats in Non-Kinetic Engagements

[Editor’s Note: Mad Scientist Laboratory is pleased to present today’s post by returning guest blogger and proclaimed Mad Scientist Dr. James Giordano and CAPT (USN – Ret.) L. R. Bremseth, identifying the national security challenges presented by emerging … Read the rest

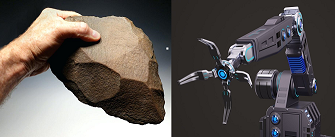

102. The Human Targeting Solution: An AI Story

[Editor’s Note: Mad Scientist Laboratory is pleased to present the following post by guest blogger CW3 Jesse R. Crifasi, envisioning a combat scenario in the not-too-distant future, teeing up the twin challenges facing the U.S. Army … Read the rest

101. TRADOC 2028

[Editor’s Note: The U.S. Army Training and Doctrine Command (TRADOC) mission is to recruit, train, and educate the Army, driving constant improvement and change to ensure the Total Army can deter, fight, and win on any battlefield now and … Read the rest

99. “The Queue”

[Editor’s Note: Mad Scientist Laboratory is pleased to present our October edition of “The Queue” – a monthly post listing the most compelling articles, books, podcasts, videos, and/or movies that the U.S. Army’s Training and Doctrine Command (TRADOC) Mad … Read the rest
