The Evolution of AI, Implications for the DoD, and Unintended Consequences

[Editor’s Note: The U.S. Army continues to grapple with how best to incorporate autonomy, robotics, and Artificial Intelligence (AI) into our force modernization efforts. In the words of former Deputy Secretary of Defense Mr. Bob Work, this is an “Own the Night Moment for the United States Army.” But the clock is ticking, and our adversaries also understand the game-changing power of AI.

President Vladimir Putin virtually addressed over one million Russian school children and teachers at 16,000 schools on September 1, 2017, stating, “Artificial intelligence is the future, not only for Russia, but for all humankind. It comes with colossal opportunities, but also threats that are difficult to predict. Whoever becomes the leader in this sphere will become the ruler of the world.”

Just as ominously, in addressing the 20th National Congress of the Chinese Communist Party (CCP) on October 16, 2022, China’s President Xi Jinping stated that quickly elevating the People’s Liberation Army (PLA) to a world-class army is a strategic requirement, and that China would adhere to the integrated development of the PLA through the concept of “three-izations” (三化): mechanization, informatization, and intelligentization. The last of these is China’s concept for integrating AI’s machine speed and processing power into military planning, operational command, and decision support. Xi further stated that these three-izations are not to be achieved in stages but are to be pursued simultaneously and in parallel.

But it’s not just our adversaries that are racing to harness the power of AI to achieve battlefield overmatch. Ukraine has already incorporated machine vision into its heavy bomber Unmanned Aerial Vehicles (UAVs), yielding fully autonomous battlefield lethality, and is pursuing the same for its First-Person View (FPV) UAVs. Meanwhile, Israel is using AI to generate target sets in its war against Hamas in Gaza.

The Mad Scientist Laboratory welcomes today’s guest blogger John W. Mabes III with his timely submission exploring what’s at stake for the U.S. Army and the Joint Force, examining “the complexities and challenges inherent in the pursuit of AI supremacy in the context of national security” — Read on!]

In the ever-evolving landscape of global security, the intersection of Artificial Intelligence (AI) with military, communications, and intelligence applications has transcended mere technological innovation to become a defining element of geopolitical strategy and national defense.[i] The rapid evolution of AI technology, marked by unprecedented advancements in computing power, algorithmic sophistication, and data analytics,[ii] has fundamentally transformed the nature of modern warfare, intelligence gathering, and strategic communication. As nations endeavor to maintain military superiority and safeguard national interests in an increasingly complex and interconnected world, the race for AI dominance has emerged as a critical imperative, with the United States, Russia, and China at the forefront of this technological arms race.[iii]

Against that backdrop, this article delves into the multifaceted dimensions of AI’s evolution in military, communications, and intelligence applications. It explores how AI has reshaped warfare tactics, enhanced intelligence-gathering capabilities, and revolutionized communication networks. Moreover, it analyzes the ongoing arms race dynamics among key global players and evaluates the implications for the U.S. Department of Defense (DoD) in navigating this rapidly evolving strategic landscape. By examining the interplay between technological innovation, strategic competition, and ethical considerations, this article seeks to shed light on the complexities and challenges inherent in the pursuit of AI supremacy in the context of national security.

Evolution of AI in Military, Communications, and Intelligence Applications

The evolution of AI in military applications has been characterized by rapid advancements in computing power, algorithmic sophistication, and data analytics.[iv] From autonomous drones to predictive analytics for strategic planning, AI capabilities have transformed modern warfare, enhancing decision-making processes, automating logistics, and augmenting combat effectiveness. In communications and intelligence gathering, AI-driven algorithms sift through vast datasets to extract actionable insights, enabling intelligence agencies to identify threats, predict adversarial behavior, and safeguard national security interests with unprecedented precision and efficiency.

The Ongoing AI Arms Race

The US, Russia, and China are engaged in a relentless pursuit of AI supremacy, investing heavily in research, development, and deployment across military and civilian sectors.[v] The US, leveraging its technological prowess and robust defense industry, holds a narrow lead in AI innovation, with organizations such as the Joint Artificial Intelligence Center (JAIC), folded into the Chief Digital and Artificial Intelligence Office (CDAO) in 2022, spearheading AI integration efforts within the DoD.

However, Russia and China have rapidly closed the gap, prioritizing AI-driven capabilities in their military modernization efforts. Russia focuses on AI-enabled strategic deterrence and asymmetric warfare, while China emphasizes AI-driven surveillance, information warfare, and hypersonic weaponry. Semi-autonomous military drones are already deployed extensively across the globe. Both China and Russia are actively pursuing fully autonomous drones, using the battlefield in Ukraine as a proving ground to gain experience on this frontier.

Since Russia launched its full-scale invasion in February 2022, Ukraine has employed artificial intelligence in several capacities: on the battlefield, to document the conflict, and to counter Russian cyber and information warfare. Autonomous Ukrainian drones, both military and civilian, play a pivotal role in identifying and engaging Russian targets, with AI enabling automated take-off, landing, and targeting.[vi]

The recent conflict between Israel and Hamas has presented an unparalleled opportunity for the Israel Defense Forces (IDF) to employ such technologies across a broader spectrum of operations. Specifically, the IDF has used an AI target-generation platform named “the Gospel” to significantly accelerate the identification of targets for engagement, a process officials have likened to a “factory” production line. According to Aviv Kochavi, the former IDF Chief of Staff, “in the past we would produce 50 targets in Gaza per year. Now, this machine produces 100 targets a single day, with 50% of them being attacked.”[vii]

Implications for the US Department of Defense

For the DoD, the implications of the AI arms race are profound and multifaceted. On one hand, AI-enabled capabilities enhance military lethality, agility, and resilience, providing a strategic advantage in an increasingly complex and contested security environment.[viii] The integration of AI into military operations improves situational awareness, accelerates decision-making, and strengthens force projection, thereby bolstering U.S. military dominance and deterrence on the global stage.

However, the relentless pursuit of AI supremacy also creates strategic dilemmas and ethical challenges for the U.S. military establishment.[ix] The proliferation of AI-driven autonomous weapons raises concerns about the erosion of human control, the ethical use of lethal force, and the potential for unintended escalation in conflicts. Moreover, the asymmetric nature of AI warfare introduces vulnerabilities in critical infrastructure, command systems, and decision-making processes, exposing the U.S. military to new forms of cyber threats and strategic risk.

Potential Unintended Consequences

Amidst the fervor of the AI arms race, the pursuit of technological supremacy carries significant risks of unintended consequences and destabilizing effects.[x] The increasing reliance on AI-driven decision-making algorithms may exacerbate biases, amplify systemic errors, and inadvertently escalate conflicts. Moreover, the diffusion of AI technologies to non-state actors, rogue states, and cybercriminals poses grave risks to global security, potentially triggering unpredictable and uncontrollable chain reactions.

Furthermore, the lack of standardized regulations and ethical frameworks for AI warfare exacerbates these challenges, creating a volatile and unpredictable strategic environment fraught with existential risks and strategic uncertainties. The unintended consequences of AI proliferation extend beyond military applications, impacting societal norms, international relations, and geopolitical stability, necessitating a comprehensive and collaborative approach to mitigate risks and safeguard global security interests.

Conclusion

The evolution of AI in military, communications, and intelligence applications heralds a new era of warfare characterized by unprecedented technological sophistication and strategic complexity.[xi] As the U.S., Russia, China, and other nations continue to invest heavily in AI research, development, and deployment, the global security landscape is undergoing a profound transformation, with far-reaching implications for international relations, strategic stability, and ethical governance. The ongoing AI arms race underscores the urgent need for international cooperation, ethical standards, and regulatory frameworks to mitigate risks, uphold human rights, and safeguard global security interests.

In navigating the ethical, strategic, and unintended consequences of AI proliferation, the DoD faces formidable challenges and strategic dilemmas. While AI-enabled capabilities offer significant advantages in enhancing military effectiveness and operational efficiency, they also introduce new vulnerabilities, ethical considerations, and strategic uncertainties. Therefore, a comprehensive and collaborative approach is imperative, involving stakeholders from government, academia, industry, and civil society to address the complex intersection of AI technology, national security, and global stability.

As we chart a course forward in the AI-driven era of warfare, it is essential to recognize the dual nature of technological innovation: as a tool for advancing human progress and a potential source of destabilization and conflict. By harnessing the transformative potential of AI while upholding ethical principles, international norms, and democratic values, we can shape a future where technological innovation serves as a force for peace, security, and prosperity in an increasingly interconnected world.

If you enjoyed this post, check out the TRADOC G-2’s Operational Environment Enterprise public-facing page, brimming with threat content, including:

Our China Landing Zone, full of information regarding our pacing challenge, including ATP 7-100.3, Chinese Tactics, BiteSize China weekly topics, People’s Liberation Army Ground Forces Quick Reference Guide, and our thirty-plus snapshots captured to date addressing what China is learning about the Operational Environment from Russia’s war against Ukraine (note that a DoD Common Access Card [CAC] is required to access this last link).

… our Russia Landing Zone, including the BiteSize Russia weekly topics. If you have a CAC, you’ll be especially interested in reviewing our weekly RUS-UKR Conflict Running Estimates and associated Narratives, capturing what we have been learning about the contemporary Russian way of war in Ukraine over the past two years and the ramifications for U.S. Army modernization across DOTMLPF-P.

… then check out the following AI-related Army Mad Scientist content:

Intelligentization and the PLA’s Strategic Support Force, by Col (s) Dorian Hatcher

“Intelligentization” and a Chinese Vision of Future War

Thoughts on AI and Ethics… from the Chaplain Corps

The AI Study Buddy at the Army War College (Part 1) and associated podcast, with LtCol Joe Buffamante, USMC

The AI Study Buddy at the Army War College (Part 2) and associated podcast, with Dr. Billy Barry, USAWC

Gen Z is Likely to Build Trusting Relationships with AI, by COL Derek Baird

Hey, ChatGPT, Help Me Win this Contract! and associated podcast, with LTC Robert Solano

Chatty Cathy, Open the Pod Bay Doors: An Interview with ChatGPT and associated podcast

One Brain Chip, Please! Neuro-AI with two of the Maddest Scientists and associated podcast, with proclaimed Mad Scientists Dr. James Giordano and Dr. James Canton

Arsenal of the Mind presentation by proclaimed Mad Scientist Juliane Gallina, Director, Cognitive Solutions for National Security (North America) IBM WATSON, at the Mad Scientist Robotics, AI, and Autonomy — Visioning Multi-Domain Battle in 2030-2050 Conference, hosted by Georgia Tech Research Institute, Atlanta, Georgia, 7-8 March 2017

The Exploitation of our Biases through Improved Technology, by Raechel Melling

Man-Machine Rules, by Dr. Nir Buras

Artificial Intelligence: An Emerging Game-changer

Takeaways Learned about the Future of the AI Battlefield and associated information paper

The Future of Learning: Personalized, Continuous, and Accelerated

The Guy Behind the Guy: AI as the Indispensable Marshal, by Brady Moore and Chris Sauceda

Integrating Artificial Intelligence into Military Operations, by Dr. James Mancillas

Prediction Machines: The Simple Economics of Artificial Intelligence

“Own the Night” and the associated Modern War Institute podcast, with proclaimed Mad Scientist Bob Work

Bringing AI to the Joint Force and associated podcast, with Jacqueline Tame, Alka Patel, and Dr. Jane Pinelis

AI Enhancing EI in War, by MAJ Vincent Dueñas

The Human Targeting Solution: An AI Story, by CW3 Jesse R. Crifasi

An Appropriate Level of Trust…

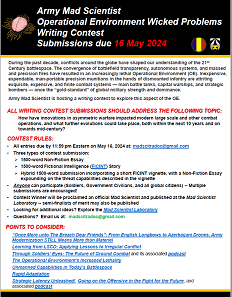

>>>>REMINDER: Army Mad Scientist wants to crowdsource your thoughts on asymmetric warfare — check out our Operational Environment Wicked Problems Writing Contest.

All entries must address the following topic:

How have innovations in asymmetric warfare impacted modern large scale and other combat operations, and what further evolutions could take place, both within the next 10 years and on towards mid-century?

We are accepting three types of submissions:

- 1500-word Non-Fiction Essay
- 1500-word Fictional Intelligence (FICINT) Story
- Hybrid 1500-word submission incorporating a short FICINT vignette, with a Non-Fiction Essay expounding on the threat capabilities described in the vignette

Anyone can participate (Soldiers, Government Civilians, and all global citizens) — Multiple submissions are encouraged!

All entries are due NLT 11:59 pm Eastern on May 16, 2024 at: madscitradoc@gmail.com

Click here for additional information on this contest — we look forward to your participation!

About the Author: John W. Mabes III is a retired U.S. Army Infantry officer who works for the Center for Army Lessons Learned at the Joint Readiness Training Center.

Disclaimer: The views expressed in this blog post do not necessarily reflect those of the U.S. Department of Defense, Department of the Army, Army Futures Command (AFC), or Training and Doctrine Command (TRADOC).

[i] J. Manyika et al., “Notes from the AI frontier: Applications and value of deep learning,” McKinsey Global Institute, 2018.

[ii] National Security Commission on Artificial Intelligence, “Final Report,” 2021.

[iii] P. Asaro, “What should we want from a robot ethic?,” International Review of Information Ethics, vol. 6, 2006, pp. 9-16.

[iv] Asaro, “What should we want from a robot ethic?”

[v] P.W. Singer & E. Steger, “The future of conflict: WMDs, AI, and robotics,” Global Policy, vol. 5, no. 3, 2014, pp. 293-298.

[vi] Gordon B. “Skip” Davis, Jr. & Lorenz Meier, “The Challenges Posed by 21st-Century Warfare and Autonomous Systems,” Center for European Policy Analysis, Washington, DC, 25 October 2023.

[vii] Harry Davies, Bethan McKernan, & Dan Sabbagh, “‘The Gospel’: how Israel uses AI to select bombing targets in Gaza,” The Guardian, 1 December 2023.

[viii] Singer & Steger, “The future of conflict.”

[ix] Manyika, “Notes from the AI frontier.”

[x] National Security Commission on Artificial Intelligence.

[xi] Asaro, “What should we want from a robot ethic?”