[Editor’s Note: The Army’s Mad Scientist Laboratory is pleased to feature another post by the United States Army War College (USAWC) Team Future Nerds, excerpted from their final report entitled The Rise of the Digital Native: How the Next Generation of Analysts and Technology are Changing the Intelligence Landscape. This report was a group research project for Team Future Nerds’ Master of Strategic Studies degree. The research project ran for approximately four months, from January 2023 through April 2023, and answered the following questions posed by LTG Laura A. Potter, Deputy Chief of Staff G2, Headquarters, Department of the Army:

How do 18-22-year-old intelligence analysts likely consume, synthesize, and communicate information today?

How is information consumption likely to evolve in ways that will change end-user information consumption habits between now and 2040?

Today’s post excerpts COL Derek Baird’s piece examining the issue of trust and Artificial Intelligence (AI) from a generational perspective. Tomorrow’s Army recruits will be digital natives, accustomed to using AI to augment and supplement decision-making in their everyday lives. But as we’ve seen with previous revolutions in information technology, our adversaries could exploit and weaponize this AI dependency as an additional attack surface, creating new threat vectors via patching and data poisoning to exploit inherent biases and malignly influence AI users. We need to build resiliency into our AI-enabled systems to ensure trustworthiness, or we risk becoming increasingly vulnerable to manipulation by nefarious state and non-state actors. Read on!]

Executive Summary

Generation Z is likely (55-70%) to trust AI more than past generations, leading to increasing use of artificial intelligence. Trustworthiness is a key element of sustained AI use, both today and in the future. Gen Z’s trust stems from their status as digital natives who access information anytime, anywhere, and from their ability to integrate emerging technology into their daily lives. Despite several unique challenges to trustworthy AI, such as transparency, reliability, security, and privacy, Generation Z is likely to continue integrating AI into their daily lives.

Discussion

Members of Generation Z are digital natives who have grown up in a world where technology is integrated into every aspect of their lives, and they are more apt to trust AI than previous generations. In March 2021, the University of Queensland in Australia conducted a five-country study on trustworthy AI, which found that Gen Z trust AI 10 percentage points more than Baby Boomers do (34% vs. 24%). More recent surveys also suggest that over 70% of Gen Z believe AI will have a positive impact on the world.

Virtual assistants are a notable example of trusted AI. Anthropomorphism (attributing human characteristics to objects such as virtual assistants) increases trust resilience. Virtual assistants are becoming increasingly common in society. Gartner, a research and advisory firm that helps organizations make smarter decisions and improve performance on their mission-critical priorities, predicts that by 2025, 50% of knowledge workers will use a virtual assistant on a daily basis, up from 2% in 2019.

As digital natives, members of Generation Z embrace technology and trust that it will have a positive effect on their livelihoods. Gen Z have experienced information ubiquity their entire lives, accessing it anytime, anywhere, and have been at the forefront of technology shifts. These attributes make Gen Z more comfortable with, and more willing to trust, AI.

Developing trustworthy AI is essential to paving the way for greater integration of AI, both now and for future generations. Trustworthy AI refers to artificial intelligence systems that can be relied upon to function as intended while also operating in an ethical and socially responsible manner. It is built on a foundation of autonomy, privacy, transparency, and security.

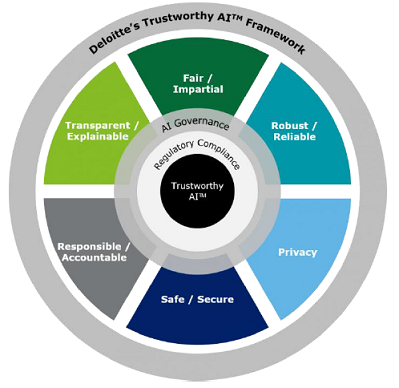

Trustworthy AI should be able to recognize and mitigate errors, and alert humans or secondary systems when necessary. Continuous feedback loops provide mechanisms for reducing machine learning bias and addressing ethical dilemmas, thereby increasing trust in the system. The Deloitte AI Institute developed a framework (see Figure 1, below) to identify and mitigate potential risks related to AI ethics at every stage of the AI lifecycle.

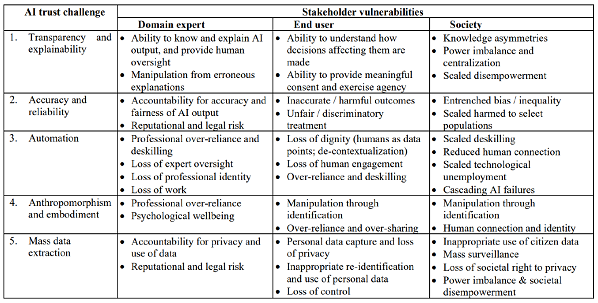

Trustworthy AI comes with unique challenges. A review of trust in AI highlighted five central AI trust challenges (see Table 1, below): 1) transparency and explainability, 2) accuracy and reliability, 3) automation, 4) anthropomorphism, and 5) mass data extraction. Each of these challenges represents a vulnerability, and vulnerability is a key element of trust. Understanding and mitigating these risks is therefore central to building trust in AI.

To this end, the European Commission’s AI High-Level Expert Group (AI HLEG) developed an initiative to ensure that AI is human-centric and trustworthy. Regulation that safeguards security and privacy is a key factor in ensuring AI trustworthiness.

Trustworthy AI is crucial to ensuring that the development and use of AI systems in the public and private sectors are safe and effective. It is also important to note that trustworthy AI is not only a technical matter but also a social and ethical one, requiring collaboration across different fields and perspectives to ensure that AI works for the benefit of all. A trustworthy AI framework can help organizations manage the unique risks associated with AI while ensuring that their systems remain transparent and accountable.

Analytic Confidence

The analytic confidence for this estimate is moderate. Sources were reliable and corroborated one another. This report was generated using the author’s analysis and AI (ChatGPT, Perplexity, Unrestricted Intelligence, and Elicit) to help develop a framework and identify resources. All AI-provided resources were verified to ensure validity. This report is subject to change based on new information gathered and analyzed through more detailed, collaborative research.

If you enjoyed this post, check out Team Future Nerds’ complete The Rise of the Digital Native: How the Next Generation of Analysts and Technology are Changing the Intelligence Landscape final report.

… then review the following related Mad Scientist content:

Engaging Generations Z and Alpha: Communicating Effectively with Digital Natives, by LTC James Esquivel

Weaponized Information: What We’ve Learned So Far…, Insights from the Mad Scientist Weaponized Information Series of Virtual Events and all of this series’ associated content and videos — especially Keith Law’s presentation on Decision Making, as well as his Bias, Behavior, and Baseball blog post and associated podcast

The Exploitation of our Biases through Improved Technology, by Raechel Melling

Insights from Army Mad Scientist’s Back to the Future Conference

The Death of Authenticity: New Era Information Warfare

Sub-threshold Maneuver and the Flanking of U.S. National Security, by Dr. Russell Glenn

The Erosion of National Will – Implications for the Future Strategist, by Dr. Nick Marsella

A House Divided: Microtargeting and the next Great American Threat, by 1LT Carlin Keally

Recruiting the All-Volunteer Force of the Future and The Inexorable Role of Demographics, by proclaimed Mad Scientist Caroline Duckworth

U.S. Demographics, 2020-2028: Serving Generations and Service Propensity

The AI Study Buddy at the Army War College (Part 1) and associated podcast, with LtCol Joe Buffamante, USMC

The AI Study Buddy at the Army War College (Part 2) and associated podcast, with Dr. Billy Barry

Chatty Cathy, Open the Pod Bay Doors: An Interview with ChatGPT and associated podcast

Artificial Intelligence: An Emerging Game-changer

Takeaways Learned about the Future of the AI Battlefield and associated information paper

The Guy Behind the Guy: AI as the Indispensable Marshal, by Brady Moore and Chris Sauceda

Integrating Artificial Intelligence into Military Operations, by Dr. James Mancillas

Prediction Machines: The Simple Economics of Artificial Intelligence

“Own the Night” and the associated Modern War Institute podcast, with proclaimed Mad Scientist Bob Work

Bringing AI to the Joint Force and associated podcast, with Jacqueline Tame, Alka Patel, and Dr. Jane Pinelis

AI Enhancing EI in War, by MAJ Vincent Dueñas

The Human Targeting Solution: An AI Story, by CW3 Jesse R. Crifasi

An Appropriate Level of Trust…

>>>>ANNOUNCEMENT: Join USA Fight Club, CAE USA, and Army Mad Scientist on 23 September 2023 (this Saturday night!) from 1800-2200 for an evening of wargaming — check out the flyer here for more information!

>>>>REMINDER: The U.S. Army War College is preparing for the Third Annual Strategic Landpower Symposium on 7-9 May 2024.

Last year’s Second Annual Strategic Landpower Symposium included several general officer speakers. GEN Flynn, CG, USARPAC, was the Keynote speaker; GEN Hokanson, Chief, National Guard Bureau, was a guest speaker; and LTG Bernabe, CG, III Corps, was our Capstone speaker among seven other general officer speakers and panelists. Videos of this event can be found here and on the Strategic Landpower Symposium website.

Last year, we received 26 papers from the military academic community, including several submissions from foreign allied and partner officers, and were able to select eight for publication through Marine Corps University Press. These selected papers are in the final stages of publication and will be available at the 2024 Strategic Landpower Symposium.

Check out the Call for Papers flyer for next year’s symposium, providing the event’s themes, proposed abstract submission guidelines/suspense, and paper submission guidelines/suspense. For additional information about the Symposium, click here.

Disclaimer: The views expressed in this blog post do not necessarily reflect those of the U.S. Department of Defense, Department of the Army, Army Futures Command (AFC), or Training and Doctrine Command (TRADOC).