[Editor’s Note: Mad Scientist Laboratory is pleased to present (somewhat belatedly) our July edition of “The Queue” – a monthly post listing the most compelling articles, books, podcasts, videos, and/or movies that the U.S. Army’s Training and Doctrine Command (TRADOC) Mad Scientist Initiative has come across during the past month. In this anthology, we address how each of these works either informs or challenges our understanding of the Future Operational Environment. We hope that you will add “The Queue” to your essential reading, listening, or watching each month!]

1.  “P.W. Singer: Adapt Fast, or Fail,” by Brendan Nicholson, Australian Strategic Policy Institute, 7 July 2018.

Mr. Nicholson summarizes a recent presentation by one of our favorite Mad Scientists, P.W. Singer from New America. Mr. Singer warns that as more and more items are linked to the Internet of Things, the opportunities for nations and societies (as well as non-state actors and super-empowered individuals) to attack and be attacked become much broader. He states that “all of this technology does not mean that we will see humans eliminated from war anytime soon. Rather, just like the steam engine and the plane and the computer, we will see changes in the human skills that are most needed and less needed. This movement of people skills can and should change everything from our recruiting and training to our doctrine and organizational design.” This movement of people skills was a key aspect of last week’s Mad Scientist Learning in 2050 Conference, conducted at Georgetown University on 8-9 August. The demands on Leaders, and the skills they will need to compete in the changing character of war, are probably fundamentally different. Mr. Singer challenges us to choose real change rather than change just enough to fail. His example of the USS Arizona, with its two catapult-launched float planes, illustrates a bureaucracy’s incremental approach in the face of revolutionary change. That change, the modern bomber, turned this once-great warship into a monument to “a date which will live in infamy.”

2. The Quantum Spy: A Thriller, by David Ignatius, W. W. Norton & Company, Inc., 17 November 2017.

David Ignatius, famed spy novelist and Washington Post journalist, tackles not only espionage but also a multitude of disruptive technologies in his new thriller, The Quantum Spy. The book revolves around a race between the United States and China toward leap-ahead developments in quantum computing, but a looming subplot is the cat-and-mouse game of counterintelligence, infiltration, and insertion of moles between the Central Intelligence Agency and the Chinese Ministry of State Security. CIA case officer and Army veteran Harris Chang struggles with his Chinese heritage, his devotion to America, and the sometimes unscrupulous role his organization plays in fighting to protect America’s secrets. The book is replete with detailed and accurate descriptions of American innovation efforts. Its depiction of the infiltration of American college campuses and research institutions by foreign students, sponsored and often directed by foreign adversaries, is alarming and timely given recent real-world events, such as a Chinese student taking groundbreaking work on metamaterials at Duke University back to his home country. The book raises important questions about the balance between open, collaborative innovation (which opens up a number of vulnerabilities) and more restrictive, government-funded research (which may be more secure), both of which are critical in the current Era of Accelerated Human Progress (now through 2035), as described in The Operational Environment and the Changing Character of Future Warfare. As with Agents of Innocence and Body of Lies, David Ignatius has created a work that not only features a fantastic story but also carries many government, military, and intelligence implications.

3. “War and the Human Brain” podcast with Dr. James Giordano and Mr. John Amble, Modern War Institute, 24 July 2018 (originally aired in 2017) – review by Marie Murphy.

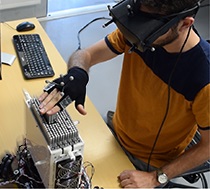

Modern War Institute’s John Amble spoke with Dr. James Giordano about his research in neuroscience and using “the brain as a weapon” following his presentation at the Mad Scientist Visualizing Multi Domain Battle in 2030-2050 Conference, 25-26 July 2017, at Georgetown University, Washington, D.C. After a brief historical overview of neuroscience’s military applications, Dr. Giordano explains how recent research on electric and magnetic transcranial stimulation and implantable electrodes has opened up both possibilities and controversies. Soldiers of the future could obtain modifications that improve memory, cognition, and vigilance while decreasing fatigue. However, discontinuing, removing, or deactivating these improvements poses an ethical dilemma: there is concern that the Soldier could feel disabled or disenabled afterwards. The discussion then turns to the implications of “drugs, bugs, toxins, and tools,” all of which can affect neurological activity, and all of which can be weaponized. These capabilities, while not considered weapons of mass destruction, are categorized as weapons of mass disruption. These tools and technologies pose a real and rising threat in the future Operational Environment; they are deployable by nation-states, non-state actors, and super-empowered individuals, and can be specifically targeted for optimal impact. Read more about these capabilities in the Mad Scientist Bio Convergence and Soldier 2050 Conference Final Report.

4. “Creating Genetically Modified Babies Is ‘Morally Permissible,’ Says Ethics Committee,” by Kristin Houser, Futurism, 17 July 2018.

On 17 July 2018, the UK’s Nuffield Council on Bioethics issued a press release in conjunction with its publication of Genome editing and human reproduction. The Council, established in 1991 to address ethical issues raised by new developments in biology and medicine, “concluded that editing the DNA of a human embryo, sperm, or egg to influence the characteristics of a future person (‘heritable genome editing’) could be morally permissible.” Futurism interpreted this as meaning we are “one step closer to designer babies,” and concluded it “is a promising sign for anyone eager for the day gene-editing lets them create the offspring of their dreams.” That said, the Council recommends two overarching principles governing the ethical use of heritable genome editing: “they must be intended to secure, and be consistent with, the welfare of the future person; and they should not increase disadvantage, discrimination or division in society.” The Council also noted that current British law precludes the genomic editing of embryos that are to be placed in a womb. So, no Brave New World in our future, right?

Not necessarily… As Mr. Hank Greely, Professor of Law, Stanford University, pointed out this spring at our Mad Scientist Bio Convergence and Soldier 2050 Conference, we are on the cusp of being able to use skin cells to generate lines of viable embryos, which may then undergo Preimplantation Genetic Diagnosis prior to selection and implantation to preclude a host of genetic diseases and ensure healthier babies (who could possibly object to that?). With the advent of genetic editing and artificial wombs, we will be able to manipulate the genomic coding of any given embryo (initially to address genetic disease, but eventually to enhance capabilities), implant it, and then “decant” the resulting progeny. Sound far-fetched? At the same conference, Ms. Elsa Kania, CNAS, noted that the PRC is currently gene editing human embryos and conducting human clinical trials. China’s BGI (originally the Beijing Genomics Institute) is soliciting DNA from Chinese geniuses in an attempt to understand the genomic basis for intelligence. With the advent of genetically enhanced humans, it is conceivable that we could face adversaries in the Deep Future Operational Environment fielding warrior-caste soldiers, each genetically modified as an embryo for greater strength, endurance, and combat performance in complex and extreme environments (e.g., high or low temperatures and low atmospheric pressure), and equipped with optimized Brain Computer Interfaces. Previous regimes sought to populate their forces with “Supermen”; genomic editing may provide future regimes with the post-industrial means of accomplishing this objective “… by the lights of perverted science.”

5. “This VR Horror Game Is Exactly as Scary as Your Body Can Handle,” by Kristin Houser, Futurism, 22 July 2018.

Red Meat Games has released its fifth virtual reality (VR) project, Bring to Light. Its developers designed the VR horror game to push players to their terror limits with the help of a biometric sensor. At present, Bring to Light is the first VR game to use biometric feedback to affect gameplay; it calls to mind the Black Mirror episode “Playtest,” a near-future cautionary tale about the risks of combining VR and Augmented Reality (AR) with gaming. Despite such cautionary tales, AR and VR gaming will become more integrated and more player-involved. As discussed in last month’s edition of “The Queue,” VR also has the potential to accelerate learning and enhance retention when used to train our Soldiers and Leaders.

6. “‘Shapeshift’ lets you feel objects that aren’t there” video, CNET, 21 July 2018.

Stanford University is working on a technology known as “Shapeshift” that presents users with a haptic “touch” interface bridging VR and the physical world. Shapeshift is a high-resolution, compact, modular shape display consisting of 288 actuated pins (4.85 mm × 4.85 mm, with 2.8 mm inter-pin spacing) formed by six 2×24 pin modules. It is reminiscent of the pin art toys that children and adults alike have played with for years. The interface will allow users to truly feel the objects they see and interact with in VR, bringing an entirely new level of immersion to constructed virtual or augmented worlds. The implications for accurate and intuitive modeling, design, simulation, and training are astounding. In the future, such interfaces could be used in vehicles, on or with weapons, and in classrooms and other training venues.
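To make the pin-array idea concrete, here is a minimal Python sketch of how a shape display of this kind might render a virtual surface: sample the surface height at each pin’s center and clamp it to the pin’s travel. It assumes, for illustration only, that the six 2×24 modules are arranged as a 12×24 grid and that the center-to-center pitch is the 4.85 mm pin width plus the 2.8 mm spacing; `render_height_map`, `max_height_mm`, and the dome surface are hypothetical names and values, not Stanford’s control software.

```python
import math
from typing import Callable, List

# Assumed layout (illustrative only): six 2x24 modules arranged as a 12x24 grid.
MODULES = 6
ROWS_PER_MODULE = 2
ROWS = MODULES * ROWS_PER_MODULE  # 12 rows
COLS = 24                         # 12 * 24 = 288 pins

PIN_WIDTH_MM = 4.85
GAP_MM = 2.8
PITCH_MM = PIN_WIDTH_MM + GAP_MM  # assumed center-to-center distance between pins


def render_height_map(surface: Callable[[float, float], float],
                      max_height_mm: float) -> List[List[float]]:
    """Sample surface(x_mm, y_mm) at every pin center and clamp to [0, max_height_mm]."""
    heights = []
    for r in range(ROWS):
        row = []
        for c in range(COLS):
            x = c * PITCH_MM + PIN_WIDTH_MM / 2  # center of the pin in column c
            y = r * PITCH_MM + PIN_WIDTH_MM / 2  # center of the pin in row r
            row.append(min(max(surface(x, y), 0.0), max_height_mm))
        heights.append(row)
    return heights


if __name__ == "__main__":
    # Hypothetical dome centered on the display; pins far from the center retract to 0.
    cx, cy = COLS * PITCH_MM / 2, ROWS * PITCH_MM / 2

    def dome(x: float, y: float) -> float:
        return 40.0 - 0.5 * math.hypot(x - cx, y - cy)

    grid = render_height_map(dome, max_height_mm=30.0)
    print(sum(len(row) for row in grid))  # number of pins driven
```

Clamping reflects the physical limit that a pin can neither sink below its base nor rise beyond its actuator’s travel, which is why the dome’s peak is flattened at the assumed 30 mm ceiling.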

7. “Smart Bandages Designed to Monitor and Tailor Treatment for Chronic Wounds,” by Mike Silver, Tufts University, TuftsNow, 6 July 2018.

Engineers from Tufts University have redesigned the bandage with the intent of turning it from a passive treatment into an active treatment for chronic wounds. These skin wounds can result from burns, diabetes, or other medical conditions that overwhelm the skin’s normal regenerative capabilities. The bandage monitors pH and temperature and can administer drugs when either goes out of its normal range. While the bandage currently treats only certain chronic skin conditions, it is easy to see future applications of this technology, especially for Soldiers on the battlefield. Persistent or serious wounds could be monitored and treated in real time without taking the Soldier out of the fight or waiting for medical advice and treatment from a professional. This could reduce both cost and recovery time. What is the next step beyond smart bandages? Will it be feasible to embed general health sensors and a variety of treatments on the Soldier in the future?
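The trigger logic described here (monitor pH and temperature, release a drug when either leaves its normal range) can be sketched in a few lines of Python. The ranges below are hypothetical placeholders chosen for illustration, not clinical values, and `should_release_drug` is an invented name; the source does not describe the Tufts device’s actual control logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Range:
    """Closed interval [low, high] treated as 'normal' for one sensor."""
    low: float
    high: float

    def contains(self, value: float) -> bool:
        return self.low <= value <= self.high


# Hypothetical placeholder ranges for illustration only; NOT clinical thresholds.
PH_RANGE = Range(5.5, 7.5)
TEMP_RANGE_C = Range(30.0, 38.0)


def should_release_drug(ph: float, temp_c: float,
                        ph_range: Range = PH_RANGE,
                        temp_range: Range = TEMP_RANGE_C) -> bool:
    """Return True when either reading falls outside its normal range."""
    return not (ph_range.contains(ph) and temp_range.contains(temp_c))


if __name__ == "__main__":
    readings = [(6.5, 34.0), (8.2, 34.5), (6.8, 39.1)]  # made-up (pH, degrees C) samples
    for ph, temp_c in readings:
        print(ph, temp_c, should_release_drug(ph, temp_c))
```

With these placeholder ranges, only the first sample stays in range; the second (pH too high) and third (temperature too high) would each trigger a release.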

If you read, watch, or listen to something this month that you think has the potential to inform or challenge our understanding of the Future Operational Environment, please forward it (along with a brief description of why its potential ramifications are noteworthy to the greater Mad Scientist Community of Action) to our attention at usarmy.jble.tradoc.mbx.army-mad-scientist@mail.mil. We may select it for inclusion in our next edition of “The Queue”!

For the U.S. military to maintain its overmatch capabilities, innovation is an absolute necessity. As noted in

1. Identifying the NEED:

3. Identifying the EXPERTISE:

4. Identifying the RESOURCES:

AR and MR function in real-time, bringing the elements of the digital world into a Soldier’s perceived real world, resulting in optimal, timely, and relevant decisions and actions. AR and MR allow for the overlay of information and sensor data into the physical space in a way that is intuitive, serves the point of need, and requires minimal training to interpret. AR and MR will enable the U.S. military to survive in complex environments by decentralizing decision-making from mission command and placing substantial capabilities in Soldiers’ hands in a manner that does not overwhelm them with information.

In addition, AR and MR will revolutionize training, empowering Soldiers to train as they fight. Soldiers will be able to use real-time sensor data from unmanned aerial vehicles to visualize battlefield terrain with geographic awareness of roads, buildings, and other structures before conducting their missions. They will be able to rehearse courses of action and analyze them before execution to improve situational awareness. AR and MR are increasingly valuable aids to tactical training in preparation for combat in complex and congested

1. Real World: The pre-internet, touch-feel-and-smell real world.

2. Digital Reality 1.0: There are many existing digital realities that people can immerse themselves in, including gaming, social media, and worlds such as

3. Digital Reality 2.0: The Mixed Reality (MR) world of Virtual Reality (VR) and Augmented Reality (AR). These technologies are still in their early stages; however, they show tremendous potential for receiving and perceiving information, as well as for experiencing narratives through synthetic or captured moments.

Suspended moments of actual real-world environments are captured with 360-degree cameras, which record a video moment in time. These cameras already exist, and the degree to which the VR user feels teleported to that geographical and temporal moment will depend largely on the quality of the video and sound. This VR experience can also be modified, edited, and amended just as regular videos are edited today. Coupled with technologies that authentically replicate voice (e.g., Adobe VoCo) and technologies that can change faces in videos, this creates open-ended possibilities for ‘fake’ authentic videos and soundbites that can be embedded.

Some say the combination of voice command, artificial intelligence, and AR will make screens a thing of the past. Google is experimenting with its new app

4. Brain Computer Interface (BCI): Also called Brain Machine Interface (BMI). BCI has the potential to create another reality when the brain is seamlessly connected to the internet. This may also include connection to artificial intelligence and to other brains. This technology is currently being developed, and the space for ‘minimally invasive’ BCI has exploded. Should it work as intended, the user would, in theory, communicate directly with the internet through thought, and the lines would blur between the user’s own memory and knowledge and the augmented intelligence their brain accessed in real time through BCI.

In this sense, the user would also be able to communicate with others through thought, using BCI as the medium. Sharing information, ideas, memories, and emotions through this medium would create a new way of receiving, creating, and transmitting information, as well as a new reality experience. However, for those with a

5. Whole Brain Emulation (WBE): WBE brings an entirely new dimension to the information landscape. It is still very much in its early stages; however, if successful, it would create a virtual, immortal, sentient existence that would live in and interact with the other realities. It is still unclear whether the uploaded mind would be sentient, how it would interact with its new world (the cloud), and what implications it would have for those who know or knew the person.

As the technology is still new, many avenues for brain uploading are being explored, including uploading while a person is alive and when a person dies. Ultimately, a ‘copy’ of the mind would be made and a computer would run a simulation model of the uploaded brain, which is also expected to have a conscious mind of its own. This uploaded, fully functional brain could live in a virtual reality or in a computer that takes physical form in a robot or biological body. Theoretically, this technology would allow uploaded minds to interact with all realities and to create and share information.

For example, if Osama bin Laden’s brain had been uploaded to the cloud, his living followers could interact with him and receive feedback and guidance for generations to come. Another example is Adolf Hitler; if his brain had been uploaded, his modern-day followers would be able to interact with him through cognitive augmentation and AI. The technology could, of course, be used to ‘keep’ loved ones in our lives; however, it has broader implications when used to perpetuate harmful ideologies, shape opinions, and mobilize populations into violent action. As mind-boggling as all this may sound, WBE, the “hypothetical futuristic process of scanning the mental state of a particular brain substrate and copying it to a computer,” is being scientifically pursued. In 2008, the Future of Humanity Institute at Oxford University published a technical report about the

Despite the many questions that remain unanswered and the lack of a human brain upload proof of concept, a new startup,