[Editor’s Note: The U.S. Army Futures Command (AFC) and Training and Doctrine Command (TRADOC) co-sponsored the Mad Scientist Disruption and the Operational Environment Conference with the Cockrell School of Engineering at The University of Texas at Austin on 24-25 April 2019 in Austin, Texas. Today’s post is excerpted from this conference’s Final Report and addresses how the speed of technological innovation and convergence continues to outpace human governance. The U.S. Army must not only consider how best to employ these advances in modernizing the force, but also the concomitant ethical, moral, and legal implications their use may present in the Operational Environment (see links to the newly published TRADOC Pamphlet 525-92, The Operational Environment and the Changing Character of Warfare, and the complete Mad Scientist Disruption and the Operational Environment Conference Final Report at the bottom of this post).]

Technological advancement and its subsequent employment often outpace moral, ethical, and legal standards. Governmental and regulatory bodies are then caught between technological progress and the evolution of social thinking. The Disruption and the Operational Environment Conference uncovered and explored several tension points that may challenge the Army in the future.

Space

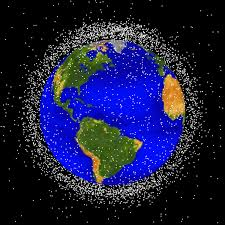

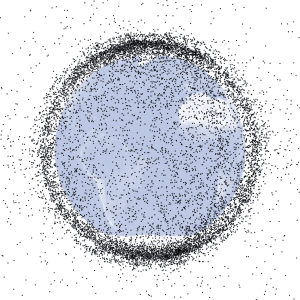

Space is one of the least explored domains in which the Army will operate; as such, we may encounter a host of associated ethical and legal dilemmas. In the course of warfare, if the Army or an adversary intentionally or inadvertently destroys commercial communications infrastructure – for example, GPS satellites – the ramifications for the economy, transportation, and emergency services would be dire and deadly. The Army will be challenged to consider how and where national defense measures in space affect non-combatants and American civilians on the ground.

International governing bodies may have to consider what responsibility space-faring entities – countries, universities, private companies – will have for mitigating orbital congestion caused by excessive launching and the aggressive exploitation of space. If the Army is judicious with its own footprint in space, it could reduce the risk of accidental collisions and unnecessary clutter and congestion. Cleaning up space debris is extremely expensive, and deconflicting active operations is essential. With each entity acting in its own self-interest, and with limited binding law or governance and no enforcement, overuse of space could lead to a “tragedy of the commons” effect.1 The Army has the opportunity to align itself more closely with international partners to develop guidelines and protocols for space operations, both to avoid potential conflicts and to influence and shape future policy. Without this early intervention, the Army may face ethical and moral challenges in the future regarding its addition of orbital objects to an already dangerously cluttered Low Earth Orbit. What will the Army be responsible for in democratized space? Will there be a moral or ethical limit on space launches?

Autonomy in Robotics

Robotics has been pervasive and normalized in military operations in the post-9/11 Operational Environment. However, the burgeoning field of autonomy in robotics, with its potential to supplant humans in time-critical decision-making, brings significant ethical, moral, and legal challenges that the Army and the larger DoD are already facing. Increased use of and reliance on autonomy will exacerbate this issue in the Operational Environment.

The increasing prevalence of autonomy will raise a number of important questions. At what point is it more ethical to allow a machine to make a decision that may save the lives of either combatants or civilians? Where does fault, responsibility, or attribution lie when an autonomous system takes lives? Will defensive autonomous operations – air defense systems, active protection systems – be more ethically acceptable than offensive autonomous operations – airstrikes, fire missions? Can Artificial Intelligence/Machine Learning (AI/ML) make decisions in line with Army core values?

Deepfakes and AI-Generated Identities, Personas, and Content

A new era of Information Operations (IO) is emerging due to disruptive technologies such as deepfakes – videos that are constructed to make a person appear to say or do something that they never said or did – and AI Generative Adversarial Networks (GANs) that produce fully original faces, bodies, personas, and robust identities.2 Deepfakes and GANs are alarming to national security experts as they could trigger accidental escalation, undermine trust in authorities, and cause unforeseen havoc. This is amplified by content such as news, sports, and creative writing similarly being generated by AI/ML applications.

This new era of IO has many ethical and moral implications for the Army. In the past, the Army has utilized industrial and early information age IO tools such as leaflets, open-air messaging, and cyber influence mechanisms to shape perceptions around the world. Today and moving forward in the Operational Environment, advances in technology create ethical questions such as: is it ethical or legal to use cyber or digital manipulations against populations of both U.S. allies and strategic competitors? Under what title or authority does the use of deepfakes and AI-generated images fall? How will the Army need to supplement existing policy to include technologies that didn’t exist when it was written?

AI in Formations

With the introduction of decision-making AI, the Army will be faced with questions about trust, man-machine relationships, and transparency. Does AI in cyber require the same moral benchmark as lethal decision-making? Does transparency equal ethical AI? What allowance for error in AI is acceptable compared to humans? Where does the Army allow AI to make decisions – only in non-combat or non-lethal situations?

Commanders, stakeholders, and decision-makers will need to gain a level of comfort and trust with AI entities, exemplifying a true man-machine relationship. The full integration of AI into training and combat exercises provides an opportunity to build trust early in the process, before decision-making becomes critical and life-threatening. AI often includes unintentional or implicit bias in its programming. Is bias-free AI possible? How can bias be checked within the programming? How can bias be managed once it is discovered, and how much will be allowed? Finally, does the bias-checking software contain bias? Bias can also be used in a positive way. Through ML – using data from previous exercises, missions, doctrine, and the law of war – the Army could inculcate core values, ethos, and historically successful decision-making into AI.

If existential threats to the United States increase, so does the pressure to use artificial and autonomous systems to gain or maintain overmatch and domain superiority. As the Army explores shifting additional authority to AI and autonomous systems, how will it address the second- and third-order ethical and legal ramifications? How does the Army reconcile its traditional values and ethical norms with disruptive technology that rapidly evolves?

If you enjoyed this post, please see:

- The entire Mad Scientist Disruption and the Operational Environment Conference Final Report, dated 25 July 2019.

- The “Ethics and the Future of War” panel, facilitated by LTG Dubik (USA-Ret.) at the Mad Scientist Visualizing Multi Domain Battle 2030-2050 Conference, held at Georgetown University on 25-26 July 2017.

Just Published! TRADOC Pamphlet 525-92, The Operational Environment and the Changing Character of Warfare, 7 October 2019, describes the conditions Army forces will face and establishes two distinct timeframes characterizing near-term advantages adversaries may have, as well as breakthroughs in technology and convergences in capabilities in the far term that will change the character of warfare. This pamphlet describes both timeframes in detail, accounting for all aspects across the Diplomatic, Information, Military, and Economic (DIME) spheres to allow Army forces to train to an accurate and realistic Operational Environment.

1 Munoz-Patchen, Chelsea, “Regulating the Space Commons: Treating Space Debris as Abandoned Property in Violation of the Outer Space Treaty,” Chicago Journal of International Law, Vol. 19, No. 1, Art. 7, 1 Aug. 2018. https://chicagounbound.uchicago.edu/cgi/viewcontent.cgi?article=1741&context=cjil

2 Robitzski, Dan, “Amazing AI Generates Entire Bodies of People Who Don’t Exist,” Futurism.com, 30 Apr. 2019. https://futurism.com/ai-generates-entire-bodies-people-dont-exist

Positioning, Navigation, and Timing (PNT).

There are efforts, such as University of Texas-Austin’s tool
