[Editor’s Note: The U.S. Army Training and Doctrine Command (TRADOC) recruits, trains, educates, develops, and builds the Army, driving constant improvement and change to ensure that the Army can successfully compete and deter, fight, and decisively win on any …

191. Competition in 2035: Anticipating Chinese Exploitation of Operational Environments

[Editor’s Note: In today’s post, Mad Scientist Laboratory explores China’s whole-of-nation approach to exploiting operational environments, synchronizing government, military, and industry activities to change geostrategic power paradigms via competition in 2035. Excerpted from products previously developed and published by …

174. A New Age of Terror: The Future of CBRN Terrorism

[Editor’s Note: Mad Scientist Laboratory is pleased to publish today’s post by guest blogger Zachary Kallenborn. In the first of a series of posts, Mr. Kallenborn addresses how the convergence of emerging technologies is eroding barriers to terrorist …

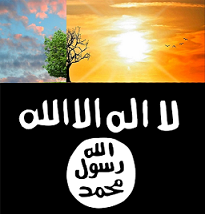

136. Future Threats: Climate Change and Islamic Terror

[Editor’s Note: Mad Scientist Laboratory welcomes back returning guest blogger Mr. Matthew Ader, whose cautionary post warns of the potential convergence of Islamic terrorism and climate change activism, possibly resonating with western populations that have not been (to …

78. The Classified Mind – The Cyber Pearl Harbor of 2034

[Editor’s Note: Mad Scientist Laboratory is pleased to publish the following post by guest blogger Dr. Jan Kallberg, faculty member, United States Military Academy at West Point, and Research Scientist with the Army Cyber Institute at West Point. …