(Editor’s Note: Since its inception in November 2017, Mad Scientist Laboratory has enabled us to expand our reach and engage global innovators from across industry, academia, and the Government regarding emergent disruptive technologies. For perspective, at the end of 2017, our blog had accrued 3,022 visitors and 5,212 views. Contrast that with the first three months of 2018, where we have racked up an additional 5,858 visitors and 11,387 views!

Our Mad Scientist Community of Action continues to grow in no small part due to the many guest bloggers who have shared their provocative, insightful, and occasionally disturbing visions of the future. To date, Mad Scientist Laboratory has published 15 guest blog posts.

And so, as the first half of FY18 comes to a close, we want to recognize all of our guest bloggers and thank them for contributing to our growth. We also challenge those of you who have been thinking about contributing a guest post to take the plunge and send us your submissions!

In particular, we would like to recognize Mr. Pat Filbert, who was our inaugural (and repeat!) guest blogger, by re-posting below his initial submission, published on 4 December 2017. Pat’s post, “Why do I have to go first?!”, generated a record number of visits and views. Consequently, we hereby declare Pat to be the Mad Scientist Laboratory’s “Maddest” Guest Blogger! for the first half of FY18. Pat will receive the following much coveted Mad Scientist swag in recognition of his achievement: a signed proclamation officially attesting to his Mad Scientist status as “Maddest” Guest Blogger!, 1st Half, FY18, and a Mad Scientist patch to affix to his lab coat and wear with pride!

And now, please enjoy Pat’s post…)

8. “Why do I have to go first?!”

“Reports indicate there’s been a mutiny by the U.S. Army’s robotic Soldiers resulting in an attack killing 47 human Soldiers.” – media report from Democratic Republic of the Congo, August 2041.

“Our robotics systems have not ‘mutinied,’ there was a software problem resulting in several of our robotic Soldiers attacking our human Soldiers resulting in casualties; an investigation is underway.” – Pentagon spokesman.

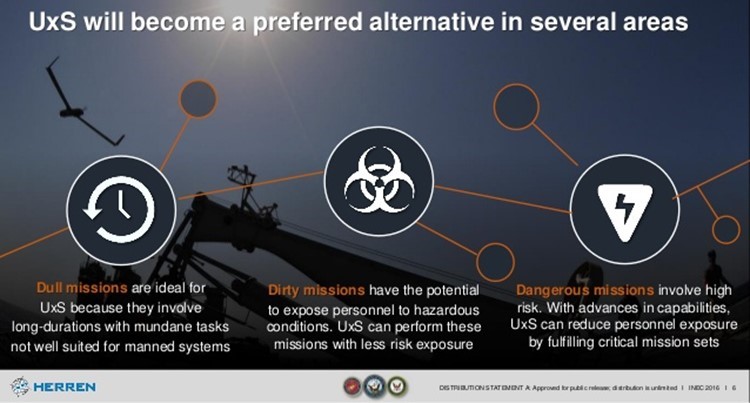

Reconciling the use of robotics has been focused on taking the risk away from humans and letting machines do the “dull, dirty, dangerous” operations. One of the premises of introducing unmanned aircraft systems into the force was to keep pilots, and their expensive aircraft, out of harm’s way while increasing the data flow for the commander.

One possible course of action is the future use of robotic Soldiers to lead the way into an urban battlefield and absorb the brunt of a defending adversary’s fire, allowing human Soldiers to exploit openings. Preserving human Soldiers to fight another day, while speeding up “house by house” clearing operations so they don’t consume humans as urban area clearing did in World War II, could be seen as a way to reduce the time a conflict takes to win.

Now we have search engine algorithms which tailor themselves to each person conducting a search to bring up the most likely items that person wants based on past searches. Using such algorithms to support supervised autonomous robotic troops has the potential for the robot to ask “why do I have to go first?” in a given situation. The robotic Soldier could calculate, far faster than a human, that survival and self-preservation to continue the mission take precedence over being used as a “bullet sponge,” as the robot police in the movie “Chappie” were.

Depending on the robotic Soldiers’ levels of autonomy, coupled with the ethical software that academics have posited could enable robots to make moral and ethical decisions, the robot Soldiers could decide not to follow their orders. Turning on their human counterparts and killing them could be calculated as the correct course of action, depending on how the robot Soldiers assess the moral and ethical aspects of the orders given and how those orders conflict with their programming. This is the premise in the movie “2001: A Space Odyssey,” where the HAL 9000 AI kills the spaceship crew because it was ordered to withhold information (lie), which conflicted with its programming to be completely truthful. Killing the crew is the result of a programming conflict; if the crew is dead, HAL doesn’t have to lie.

Classified aspects of operations are withheld from human Soldiers, so this would most likely occur with robot Soldiers as well. Withholding information in this way could trigger a programming conflict, so it has to be considered in technology development, in professional military schools’ syllabi, and on the battlefield, in terms of how to plan for, respond to, and resolve such conflicts.

• Can wargaming plans for operations including robotic Soldiers identify programming conflicts? If so, how can this be taught and programmed to resolve the conflict?

• When, and how, is the decision made to reduce the AI’s autonomy to a more automatic/non-autonomous function because of compartmentalized information?

• What safeguards have to be in place to address potential programming conflicts when the AI is “brought back up to speed” on why it was “dumbed down”?

For further general information, search ongoing discussions on outlawing weaponized autonomous systems. For academic recommendations to integrate ethical software into military autonomous systems to better follow the Laws of Warfare, see Dr. Ron Arkin’s “Ethical Robots in Warfare.”

For more information on how robots could be integrated into small units, thereby enhancing their close-in lethality and multi-domain effects, see Mr. Jeff Becker’s proposed Multi-Domain “Dragoon” Squad (MDS) concept. For insights into how our potential adversaries are exploring the role of robotics on future battlefields, see our Autonomous Threat Trends post.

Pat Filbert is retired Army (24 years, Armor/MI); now a contractor with the Digital Integration for Combat Engagement (DICE) effort developing training for USAF DCGS personnel. He has experience with UAS/ISR, Joint Testing, Intelligence analysis/planning, and JCIDS.

I am not personally as worried about the potential for future robotic platforms to become sentient and “evil.” We are not even close to this level of “strong” AI. Most movies that deal with the topic of AI imbue machines with some level of consciousness and a desire for self-preservation. I believe these are fundamental human qualities. Could a machine misinterpret orders and do what we would consider the wrong thing to achieve them? Absolutely, but that is a result of poor programming or faulty or incomplete instructions, not because the machine decided to revolt and disobey its human master. The robotic revolution is highly overhyped.