[Editor’s Note: Army Mad Scientist welcomes guest blogger Raechel Melling with her post exploring how our adversaries can target the Nation at the granular level, exploiting our inherent biases via much improved deepfake and voice artificial intelligence technology. This hemispheric threat can be targeted remotely to strike at our Soldiers and their families in garrison, eroding their trust in national institutions, elected leaders, commanders, and comrades-in-arms.

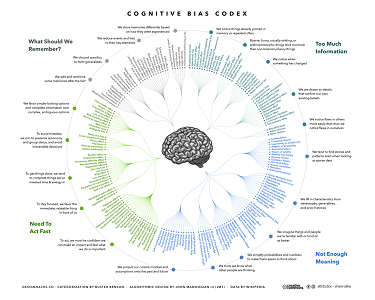

This threat vector is easily democratized and can be employed by both great and lesser states, non-state actors, multinational corporations, and super-empowered individuals (like Shamook, referenced in the post below). Our adversaries will employ this technology within a larger information operations campaign. Through constant barrages of mis- and disinformation, our adversaries will target seams to erode trust and exploit and enlarge societal fissures. Due to anchoring bias and cognitive dissonance, attempts at countering this narrative serve only to entrench it further in the targeted population. Instead, we should ‘inoculate’ our Soldiers to “minimize these effects by developing critical thinkers and training [them] to recognize their internal biases.” Only “by building a robust mental defense and process as a mental foundation, designed to combat misinformation and disinformation… [will we] win the fight against today’s information threats,… [and] the next generation of threats.” Read on!]

As the saying goes, seeing is believing. Human nature is to believe what we see to be true. This inherent bias fosters a fundamental human flaw – we are susceptible to misinformation. Advancements in technology, such as deepfakes (highly realistic fake images and videos), allow creators to exploit this inherent human bias. Creators of misinformation leverage these biases to trick us into believing what they want. With technology improving and advancing at such rapid rates, this virtual deception is getting easier and more impactful. The exploitation of our inherent biases gives malicious actors great power and influence over us and our society. It is not only malicious actors we need to be aware of, however, but also our very human nature. It is becoming increasingly important to recognize how our internal biases leave us vulnerable to misinformation that can influence the general population, manipulate the will of a nation, and worsen civil divides.

The Power to Rewrite History

The release of the recent 2021 film Army of the Dead proved that technology has reached another groundbreaking milestone. The computer-generated imagery used to completely replace all footage of one actor with footage of an entirely new actress in post-production is a feat that fosters feelings of both awe and fear. The replacement actress filmed every scene independently after the movie had already been completed and was then digitally added into the original footage. With the advancements in this technology, the new footage seamlessly integrated into the existing material making it difficult to tell any changes were made at all.

Army of the Dead is an advanced version of a deepfake. Although not used by a nefarious actor in this example, the possibility of this technology in the hands of bad actors is alarming. With the capability to realistically alter historical video documents, our adversaries have the power to rewrite history. They have the power to show that Person X was marching at a Ku Klux Klan rally when they really weren’t, or that Nation X wasn’t holding people in labor camps when they actually were. If our adversaries can manipulate and control the narrative to this degree, they can effectively use our biases against us to rationalize anything.

It may seem as though this technology was only well implemented because the work was done by a large film production company with millions of dollars to spend getting it right. While this is true, large, rich companies are not the only ones using this technology and executing it flawlessly. Lucasfilm, the production company responsible for the new series The Mandalorian, received considerable criticism for its attempt to use de-aging VFX technology to show a young Luke Skywalker in the final episode. The scene was then altered, and ultimately improved, by a well-known YouTube deepfake creator who goes by the name Shamook. The deepfake has been viewed over 2 million times and was so impressive that Lucasfilm hired Shamook to join their team. It’s clear we could be in trouble when million-dollar companies are outperformed by “amateurs” at their own game. The democratization of this technology gives any amateur with the skills and reach of Shamook the potential to do serious damage.

Not only have the visual effects of deepfakes improved, but voice artificial intelligence (AI) has advanced as well. New technology can synthesize the voice of voice actors to create completely new lines of dialogue that an actor has not recorded. The AI trains on a voice actor’s speech to generate brand new lines and has become so advanced that voice actors are concerned that their jobs will become obsolete. With this technology, adversaries could fabricate and circulate a phone call in which the U.S. President orders the Secretary of Defense to commit war crimes. If these advancements in voice AI are combined with the advancements in visual effects, the creation of truly realistic deepfakes becomes that much easier.

No Advanced Technology Needed

What about information not designed to purposely trick the viewer into believing something untrue? What about information that is simply misinterpreted by the viewer to be something it’s not? Human nature leaves us vulnerable to various types of biases such as confirmation bias and anchoring bias. When we see or hear something initially, whether true or not, it tends to skew our subsequent thinking – anchoring bias. In addition, pictures and videos can be taken out of context and used to confirm a belief that is already held by an individual but not actually supported by the evidence available – confirmation bias. These two biases can feed off each other to truly alter the way we perceive information.

In early 2021, Kelly Donohue, after winning his third straight Jeopardy game, made a hand gesture with his palm facing in, his index finger and thumb forming a circle, and his other three fingers extended to the side. Many people, including a large group of former contestants on the show, believed that Donohue was signaling to white supremacist groups that have adopted this symbol. Hundreds of former Jeopardy contestants wrote a letter demanding the show take action against Donohue; however, Donohue maintains that his hand gesture was simply meant to represent his third win and nothing more.

With so many recent examples in the news where this gesture was intentionally used to symbolize white supremacy, the former contestants had this idea anchored in their minds. Instead of analyzing the situation objectively, they started with a premise that was not supported by any available evidence and drew conclusions accordingly. In this case, false conclusions. We often think these biases don’t affect us, yet even a group of highly intelligent Jeopardy winners fell victim. Anyone can be a victim.

Implications for the Army

In a previous Mad Scientist Laboratory post, we asked the question, “How will the masses struggle with being flooded with a steady stream of AI-generated deepfakes constantly conveying mixed messages and troll armies that are indistinguishable from their fellow citizens, students, and Soldiers?” The answer is that we will continue to have our biases exploited and be made to believe things that can harm our society. In the same blog post, we stated “deepfakes are alarming to national security experts as they could trigger accidental escalation, undermine trust in authorities, and cause unforeseen havoc.” This technology holds the potential to be a destructive social force.

Many years ago, the rise of television news fostered our current bias to believe what we see from media outlets. There were many barriers to appearing on television news outlets, and the vetting process behind those barriers established a certain amount of built-in trust. This bias has continued into an age where many people consume their news from the internet, an outlet with much lower barriers to entry and far less vetting, making it harder for society to justify this trust. The ease with which anything can be put on the internet – altered videos, false soundbites, and inaccurate stories – has made it urgent for society to recognize that our biases are putting us at risk and to work to deconstruct them.

The combination of advanced technology and our constructed biases creates the ideal scenario in which a nefarious actor can cause damage. Soldiers are among those at high risk of being targeted by deepfakes. A soundbite of a Soldier’s replicated voice describing abuse from a senior Leader while on deployment, or a deepfake video of a unit being ambushed and destroyed, could be sent to Soldiers’ families to tear at the seams of trust and disrupt military readiness, the Chief of Staff of the Army’s second priority.

Opportunities for the Army

Countering deepfakes has no easy, enduring technological solution. Various deepfake detection systems, like the ones created by the Army Research Laboratory and Facebook, may be used to find and counter deepfakes; however, the inevitable problem with a technology solution to a technology problem is that it becomes a cat-and-mouse game. As soon as creators know what systems are being used to detect deepfakes, they will adapt, and the weaknesses those systems rely on will disappear with the next iteration of deepfakes.

Although tech solutions may assist with mitigating the risks, a more holistic and longer-lasting solution is to mitigate our inherent biases. The Army has the opportunity to minimize these effects by developing critical thinkers and training Soldiers to recognize their internal biases. If we do not have Soldiers capable of critically questioning the information they receive, we hand power over to our adversaries, who will gladly use our idleness against us. The onus is on the individual consumer, and no one else, to ensure the information they receive is accurate. Is the information independently corroborated in other sources? Is there supporting evidence?

It may benefit the Army to focus on training against disinformation as a spectrum of national security or potentially even a warfighting function. As Keith Law has stated, changing large organizations like the Army (or Major League Baseball) requires grassroots efforts. The Army may see the biggest reward by focusing on young Soldiers just beginning their careers in order to truly ingrain the fight against disinformation into Army culture. When those young Soldiers become senior leaders, they will be better equipped to process information without the effects of biases.

Conclusion

We will never rid ourselves of our biases completely, but to protect ourselves from falling for misinformation, an emphasis on training critical thinkers who recognize our biases and fight to dismantle them may give the Army an advantage on the future battlefield. If we blindly trust the information we receive without doing our due diligence as consumers, we may fall victim ourselves to malicious actors who can exploit our biases and have us believe false narratives. Allowing ourselves to be manipulated leaves us vulnerable to destructive thinking and ultimately a breakdown of the political and social structures in our societies. Recognizing, questioning, and demanding evidence for the information presented to us is a potential defense against this threat. Having a robust mental defense and process as a mental foundation, designed to combat misinformation and disinformation, will not only help to win the fight against today’s information threats, but also the next generation of threats. That said, we must redouble our guard for the next threat after deepfakes and be ready to adapt to future information warfare attacks.

If you enjoyed this post, review the following content:

Bias, Behavior, and Baseball with Keith Law, the associated podcast, and the video from Keith Law’s presentation on Decision Making [view via a non-DoD network] delivered during our Mad Scientist Weaponized Information Virtual Conference on 21 July 2020

The Death of Authenticity: New Era Information Warfare

Influence at Machine Speed: The Coming of AI-Powered Propaganda by MAJ Chris Telley

Sub-threshold Maneuver and the Flanking of U.S. National Security, by Dr. Russell Glenn

The Erosion of National Will – Implications for the Future Strategist, by Dr. Nick Marsella

…explore the following related posts and podcasts:

Disinformation, Revisionism, and China with Doowan Lee and associated podcast

The Convergence: The Next Iteration of Warfare with Lisa Kaplan and associated podcast

The Convergence: True Lies – The Fight Against Disinformation with Cindy Otis and associated podcast

The Convergence: Political Tribalism and Cultural Disinformation with Samantha North and associated podcast

… and check out the following Information Warfare posts:

A House Divided: Microtargeting and the next Great American Threat, by 1LT Carlin Keally

Weaponized Information: What We’ve Learned So Far…, Insights from the Mad Scientist Weaponized Information Series of Virtual Events and all of this series’ associated content and videos [access via a non-DoD network]

Weaponized Information: One Possible Vignette and Three Best Information Warfare Vignettes

Raechel Melling is an Army Career Program 26 Fellow with the Headquarters, U.S. Army Training and Doctrine Command (TRADOC). She graduated from The College of William and Mary as a Government major and Accounting minor. She has had the opportunity to work in various organizations within the TRADOC staff, including the G-5, G-8, Command Group, the Congressional Activities Office, and most recently the G-2’s Mad Scientist Team.

Disclaimer: The views expressed in this blog post do not necessarily reflect those of the Department of Defense, Department of the Army, Army Futures Command (AFC), or Training and Doctrine Command (TRADOC).
