[Editor’s Note: Mad Scientist Laboratory is pleased to excerpt today’s post from Dr. James Mancillas’ paper entitled Integrating Artificial Intelligence into Military Operations: A Boyd Cycle Framework (a link to this complete paper may be found at the bottom of this post). As Dr. Mancillas observes, “The conceptual employment of Artificial Intelligence (AI) in military affairs is rich, broad, and complex. Yet, while not fully understood, it is well accepted that AI will disrupt our current military decision cycles. Using the Boyd cycle (OODA loop) as an example, ‘AI’ is examined as a system-of-systems; with each subsystem requiring man-in-the-loop/man-on-the-loop considerations. How these challenges are addressed will shape the future of AI enabled military operations.” Enjoy!]

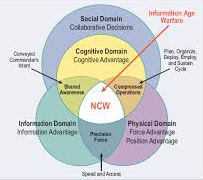

Success in the battlespace depends on collecting information, evaluating it, and then making quick, decisive choices. Network Centric Warfare (NCW) demonstrated this concept during the early phases of information age warfare.

As the information age has matured, adversaries have adopted its core tenet: winning in the decision space is winning in the battlespace.1 The competitive advantage that may once have existed has eroded. Moreover, the principal feature of information age warfare, the ability to gather and store communication data, now yields more data than humans can process.2 Maintaining a competitive advantage in the information age will require a new way of integrating an ever-increasing volume of data into the decision cycle.

Future AI systems offer the potential to keep maximizing the advantages of information superiority while overcoming the limits of human cognition. With nearly endless and faultless memory, no emotional investment, and potentially unbiased analysis, AI systems may give future military leaders a competitive cognitive advantage. These advantages may emerge only if AI is understood, properly utilized, and integrated into a seamless decision process.

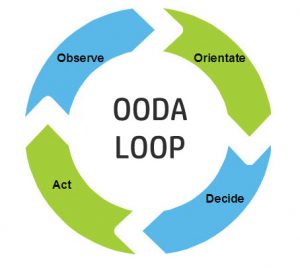

The OODA (Observe, Orient, Decide, and Act) Loop provides a methodical framework for exploring (1) how future autonomous AI systems may participate in the various elements of decision cycles; (2) what aspects of military operations may need to change to accommodate future AI systems; and (3) how implementing AI with varying degrees of autonomy may create a competitive decision space.3

Observe

AI systems can automate the observe step, either as a standalone activity or as part of a broader integrated analysis. Observation of this kind requires sophisticated AI analysis, and various degrees of autonomy can be applied within it. Because observing combines several activities, the degree of autonomy for scanning the environment may differ from the degree of autonomy for recognizing potentially significant events. Varying degrees of autonomy may even be applied to the specific tasks that make up scanning and recognizing.

Highly autonomous AI systems may be allowed to select or alter scan patterns, times and frequencies, boundary conditions, and other parameters, potentially including the choice of scanning platforms and their sensor packages. Integrated into feedback systems, highly autonomous AI systems could also alter and potentially optimize the scanning process, independently assessing the effectiveness of previous scans and exploring alternative scanning approaches.

AI systems with low autonomy, by contrast, might be precluded from altering pattern recognition parameters or the thresholds for flagging an event as potentially significant. Such systems could still perform complex analyses, but would have limited ability to explore alternative ways of examining additional environmental data.
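
A minimal sketch of how the high/low autonomy distinction might be expressed in software, assuming a simple two-level autonomy setting. The class `ObserveTask`, the parameters `scan_interval_s` and `detection_threshold`, and their default values are all hypothetical, chosen only for illustration. Real sensor systems would expose many more parameters and finer gradations of autonomy.

```python
from dataclasses import dataclass, field
from enum import Enum


class Autonomy(Enum):
    """Hypothetical two-level autonomy scale; real systems would likely need finer gradations."""
    LOW = "low"
    HIGH = "high"


# Hypothetical parameters the observe task could tune for itself.
TUNABLE = {"scan_interval_s", "detection_threshold"}


@dataclass
class ObserveTask:
    """Illustrative observe step whose self-tuning is gated by its autonomy level."""
    autonomy: Autonomy
    scan_interval_s: float = 60.0     # seconds between sensor sweeps (illustrative value)
    detection_threshold: float = 0.8  # score at or above which an event is flagged (illustrative)
    changes: list = field(default_factory=list)  # log of self-initiated parameter changes

    def propose(self, name: str, value: float) -> bool:
        """Apply a self-proposed change only if autonomy permits; return whether it was applied."""
        if name not in TUNABLE:
            raise ValueError(f"unknown parameter: {name}")
        if self.autonomy is Autonomy.LOW:
            # Low autonomy: parameters stay as set by users or developers.
            return False
        setattr(self, name, value)
        self.changes.append((name, value))
        return True

    def flag(self, score: float) -> bool:
        """Recognize step: flag an observation whose score meets the current threshold."""
        return score >= self.detection_threshold


if __name__ == "__main__":
    low, high = ObserveTask(Autonomy.LOW), ObserveTask(Autonomy.HIGH)
    print(low.propose("detection_threshold", 0.6))   # False: low autonomy keeps 0.8
    print(high.propose("detection_threshold", 0.6))  # True: high autonomy lowers it to 0.6
    print(low.flag(0.7), high.flag(0.7))             # False True
```

In this sketch the gate applies only to changes the system proposes for itself. Users or developers can still set the attributes directly, which mirrors the idea that a low-autonomy system's parameters are established by people rather than by the system.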

When AI systems operate as autonomous observation systems, they could be integrated into existing doctrine, organizations, and training with relative ease. Differences between AI systems and human observers must still be taken into account, especially in mixed teams of manned and unmanned systems. For example, AI systems could operate alongside human security forces, each with different endurance limitations. Sentry outpost locations and configurations described in existing Field Manuals may need to be revised to account for considerations specific to AI systems, e.g., safety, degrees of autonomy, communication, physical capabilities, dimensions, and integration with human forces.

The potential for ubiquitous, ever-present autonomous AI observation platforms adds a new dimension to information security. Persistent, covert, and mobile autonomous observation systems pose security challenges that we have only begun to understand. Information security within the cyber domain is just one example of the emerging challenges AI systems can create as they extend their influence into the physical domain.

Orient

Orient comprises the processes and analyses that establish the relative significance and context of the signal or data observed. An observation in its original, raw form is unprocessed data of potential interest. Orienting and prioritizing that observation begins when it is placed within the context of (among other things) previous experiences; organizational, cultural, or historical frameworks; or other observations.

One of the principal challenges for today’s military leaders is managing the ever-increasing flow of information available to them. Because collecting, storing, and communicating data has become easy and inexpensive, the supply of data now exceeds the cognitive capacity of most humans.4 As a result, numerous approaches are being considered to help commanders prioritize information and develop data-rich common operating pictures.5 These approaches include improved graphics displays and virtual reality immersion systems, each designed to give a commander access to ever-larger volumes of data. When commanders are already saturated with information, however, further optimizing the presentation of too much data may not significantly improve battlespace performance.

The emergence of AI systems capable of contextualizing data has already begun. The International Business Machines (IBM) Corporation has fielded advanced cognitive systems capable of performing near-human-level complex analyses.6 This trend is expected to continue, with AI systems increasingly displacing humans in many “white collar” staff officer activities.7 Much of the analysis performed by existing systems, e.g., identifying market trends or evaluating insurance payouts, has been in environments with reasonably well-understood rules and boundaries; the battlespace rarely offers such clearly defined conditions.

Autonomy issues associated with AI systems orienting data and developing situational awareness pictures are complex. AI systems operating with high autonomy could independently prioritize data; add or remove data from an operational picture; possibly de-conflict contradictory data streams; change informational lenses; and set priorities and hierarchies. Such systems could continuously ensure that the operational picture is the best reflection of current information. The tradeoff for this “most accurate” operational picture might be a rapidly evolving picture with little continuity, one that could confound and confuse system users. This type of AI might require blind-faith acceptance of the picture presented.

At the other end of the spectrum, AI systems with low autonomy might not explore alternative interpretations of data. These systems may use only the informational lenses and data priorities established by system users or developers. The price of a stable and consistent operational picture might be a picture biased by those prescribed lenses and priorities. This type of AI may just show us what we want to see.

Future human-AI collaboration raises additional considerations. Generic AI systems that prioritize information according to a set of standard rules may not provide the optimal human-AI pairing. Instead, AI systems adapted to complement a specific leader’s attributes may enhance that leader’s decision-making. Such man-machine interfaces could be developed over an entire career. Autonomous systems may therefore need to be flexible and portable, allowing leaders to move from job to job while retaining access to AI systems “optimized” for their specific needs.

Using AI systems to consolidate, prioritize, and frame data may require a review of how military doctrine and policy guide the use of information. As with rules of engagement, doctrine and policy may need to establish rules of information framing, potentially prescribing or restricting the use of particular informational lenses, and developing such rules presents its own challenges. If AI systems implement doctrine and policy without question or moderation, a single policy change might produce a host of unanticipated consequences.

Additionally, AI systems capable of consolidating, prioritizing, and evaluating large streams of data may eventually displace the staff who currently perform those activities.8 This restructuring could preserve high-level decision-making positions while sharply reducing the personnel who perform data compilation, logistics, accounting, and other decision support activities. The effect might be the loss of many positions in which future leaders develop their judgment. Increased automation of data analytics, and the resulting reduction in supporting staff, may therefore create a shortage of leaders with these analytical skills and tested judgment.

Decide

Decide is the process used to develop and then select a course of action to achieve a desired end state. The military decision-making process requires developing multiple courses of action (COAs), considering their likely outcomes, and then selecting the COA with the preferred outcome.

The basis for developing and choosing among COAs can be categorized as rules-based or values-based. If an AI system uses a rules-based decision process, a human is inherently in the loop regardless of the AI’s level of autonomy, because human value judgments are embedded in the rules when they are developed. Values-based decisions explore ends, ways, and means through the lenses of feasibility and suitability, and may also address acceptability and/or risk. They generally involve subjective value assessments and greater dimensionality, and usually carry legal, moral, or ethical weight. Generating and selecting COAs on this basis may involve substantially more nuanced judgments.

Differentiating among COAs may require comparing disparate value propositions. Speed of action, materiel costs, loss of life, liberty, suffering, morale, risk, and numerous other values often need to be weighed when selecting a COA for a complex problem. Because these subjective values are not easily quantified or universally weighted, they make it difficult to decide how much autonomy to grant AI systems in the decide step. As automation continues to encroach on the decision space, these subjective areas may offer humans the best opportunities to keep contributing.
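
The difficulty of weighing disparate values can be illustrated with a simple weighted-sum scoring of COAs. This is a generic multi-criteria technique swapped in for illustration, not a method proposed in the paper, and the COA names, value scores, and weights below are invented. The point is that identical assessments yield different selections under different weights, and the weights are exactly the subjective judgments that resist quantification. Once a human fixes the weights, an automated system merely applies them, which is one way to see why a rules-based process keeps a human in the loop.

```python
# Illustrative only: COA names, scores, and weights are invented for this sketch.
# Each value is scored 0-10 where higher is better, so "casualty_avoidance" and
# "risk_avoidance" stand in for the loss-of-life and risk considerations in the text.
COAS = {
    "COA A": {"speed": 9, "cost_efficiency": 4, "casualty_avoidance": 3, "risk_avoidance": 5},
    "COA B": {"speed": 5, "cost_efficiency": 7, "casualty_avoidance": 8, "risk_avoidance": 7},
}


def score(values: dict, weights: dict) -> float:
    """Weighted sum of a COA's value scores; the weights encode a subjective judgment."""
    return sum(weights[name] * v for name, v in values.items())


def select(coas: dict, weights: dict) -> str:
    """Return the name of the highest-scoring COA under the given weights."""
    return max(coas, key=lambda name: score(coas[name], weights))


# Two hypothetical weightings of the same values.
TEMPO_FIRST = {"speed": 0.7, "cost_efficiency": 0.1, "casualty_avoidance": 0.1, "risk_avoidance": 0.1}
LIFE_FIRST = {"speed": 0.1, "cost_efficiency": 0.1, "casualty_avoidance": 0.6, "risk_avoidance": 0.2}

if __name__ == "__main__":
    print(select(COAS, TEMPO_FIRST))  # COA A (scores 7.5 vs 5.7)
    print(select(COAS, LIFE_FIRST))   # COA B (scores 4.1 vs 7.4)
```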

The use of values-based or rules-based decisions tends to vary with the operational environment and the level of operation. Tactical applications tend toward rules-based decisions, while operational and strategic applications tend toward values-based decisions. Clarifying doctrine, training, and policies on rules-based and values-based decisions could be essential to ensuring that autonomous decision-making AI systems are effectively understood, trusted, and utilized.

Act

The last element of the OODA Loop is Act. For AI systems, acting on (manipulating) the environment may take two forms. The first is indirect: the AI system concludes its manipulation step by notifying an operator of its recommendations. The second is direct manipulation, in the cyber domain or in the physical, “real world” domain.

Manipulation in the cyber domain may include retrieving or disseminating information, performing analysis, executing cyber warfare activities, or any number of other cyber activities. In the physical realm, AI systems can manipulate the environment through electronically controlled mechanized systems. These mechanized systems may be a direct extension of the AI system or separate systems operated remotely.

Within the OODA framework, once the decision has been made, the act is reflexive. Advanced AI systems, though, could receive and integrate feedback while an action is being taken. If the systems supporting the decision operate as expected and events unfold as predicted, the AI system’s degree of autonomy to act may matter little. If events unfold unexpectedly, however, its autonomy to respond could be of great significance.

Consider a scenario in which an observation point (OP) is being established. The decision to set up the OP was supported by many details, among them the route taken to set up the OP, its optimal location, the expected weather conditions, and the exact time it would become operational. Under a strict out-of-scope interpretation, any difference between the real-world details and those supporting the original decision would place the action outside the scope of that decision, and the decision would be voided. Under a less restrictive in-scope interpretation, details that closely matched the expected conditions would be treated as adjustments to the approved decision, and the decision would remain valid.

Highly autonomous AI systems could be allowed to make in-scope adjustments to the “act,” removing the need for a complete OODA cycle review. By avoiding a new OODA cycle, such a system might outpace less autonomous AI elements (and human oversight), giving it an advantage. AI systems with low autonomy that follow the out-of-scope interpretation would instead have to initiate a new decision cycle every time the real world did not exactly match expected conditions. In the extreme, this could trigger a perpetual series of OODA cycles, although some adjustments to the AI system could mitigate these concerns. The question remains how much change is significant enough to restart the OODA loop; ultimately, system designers would need to resolve this issue.
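
One way designers might make the in-scope/out-of-scope distinction concrete is a per-detail tolerance check, sketched below under stated assumptions. The detail names, planned values, and tolerances for the OP scenario are hypothetical, and fixed numeric tolerances are only one possible answer to the open question of how much change is significant enough to restart the loop.

```python
# Hypothetical planning details for the OP scenario; names and numbers are invented.
PLANNED = {"location_offset_m": 0.0, "arrival_delay_min": 0.0, "wind_kph": 15.0}

# Hypothetical tolerances: the largest deviation per detail still treated as in scope.
TOLERANCES = {"location_offset_m": 200.0, "arrival_delay_min": 30.0, "wind_kph": 10.0}


def in_scope(planned: dict, actual: dict, tolerances: dict) -> bool:
    """In-scope interpretation: every detail lies within its tolerance of the plan."""
    return all(abs(actual[k] - planned[k]) <= tolerances[k] for k in planned)


def matches_exactly(planned: dict, actual: dict) -> bool:
    """Strict out-of-scope interpretation: any difference at all voids the decision."""
    return all(actual[k] == planned[k] for k in planned)


def next_step(planned: dict, actual: dict, tolerances: dict, strict: bool) -> str:
    """Decide whether the act may continue with adjustments or a new OODA cycle is needed."""
    ok = matches_exactly(planned, actual) if strict else in_scope(planned, actual, tolerances)
    return "adjust and continue" if ok else "restart OODA cycle"


if __name__ == "__main__":
    # OP sited 120 m from plan, set up 10 min late, wind 18 kph instead of 15.
    actual = {"location_offset_m": 120.0, "arrival_delay_min": 10.0, "wind_kph": 18.0}
    print(next_step(PLANNED, actual, TOLERANCES, strict=True))   # restart OODA cycle
    print(next_step(PLANNED, actual, TOLERANCES, strict=False))  # adjust and continue
```

In this sketch, the perpetual-restart problem corresponds to setting every tolerance to zero; widening the tolerances trades review and oversight for speed.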

This is not a comprehensive examination of autonomous AI systems performing the act step of the OODA loop. Yet one issue in the area of doctrine, training, and leadership merits brief discussion. Humans often rely on assumptions when assigning or performing an action, including the natural assumption that real-world conditions will differ from those used in planning and authorization. When those differences appear large, the decision is re-evaluated. When they appear small, a new decision is not sought and some risk is accepted. That risk is often assessed intuitively, and, depending on personal preferences, the action continues or is stopped. Because computational systems interpret instructions more literally, autonomous systems may lack the ability to assess and accept “personal” risks. Military doctrine addressing command and leadership philosophies, e.g., Mission Command and decentralized operations, should be reviewed to determine its applicability to operations in the information age and updated as necessary.9

The integration of future AI systems has the potential to permeate the entirety of military operations, from acquisition philosophies to human-AI team collaboration. This will require clear categories of AI systems and applications, aligned along axes of trust, with rules-based and values-based decision processes clearly demarcated. Because machines tend to adhere to literal interpretations of policy, rules, and guidance, the way such policy, rules, and guidance are developed should be reviewed to minimize unforeseen consequences.

If you enjoyed this post, please review Dr. Mancillas’ complete report here;

… see the following MadSci blog posts:

- The Guy Behind the Guy: AI as the Indispensable Marshal, by Mr. Brady Moore and Mr. Chris Sauceda

- AI Enhancing EI in War, by MAJ Vincent Dueñas

- The Human Targeting Solution: An AI Story, by CW3 Jesse R. Crifasi

- Bias and Machine Learning

- An Appropriate Level of Trust…

… read the Crowdsourcing the Future of the AI Battlefield information paper;

… and peruse the Final Report from the Mad Scientist Robotics, Artificial Intelligence & Autonomy Conference, facilitated at Georgia Tech Research Institute (GTRI), 7-8 March 2017.

Dr. Mancillas received a PhD in Quantum Physics from the University of Tennessee. He has extensive experience performing numerical modeling of complex engineered systems. Prior to working for the U.S. Army, Dr. Mancillas worked for the Center for Nuclear Waste Regulatory Analyses, a Federally Funded Research and Development Center (FFRDC) established by the Nuclear Regulatory Commission to examine the deep future of nuclear materials and their storage.

Disclaimer: The views expressed herein are those of the author(s) and do not necessarily reflect the official policy or position of the U.S. Army Training and Doctrine Command (TRADOC), Army Futures Command (AFC), Department of the Army, Department of Defense, or the U.S. Government.

1 Roger N. McDermott, Russian Perspectives on Network-Centric Warfare: The Key Aim of Serdyukov’s Reform (Fort Leavenworth, KS: Foreign Military Studies Office (Army), 2011).

2 Ang Yang, Hussein Abbass, and Ruhul Sarker, “Evolving Agents for Network Centric Warfare,” Proceedings of the 7th Annual Workshop on Genetic and Evolutionary Computation, 2005, 193-195.

3 Decision space is the range of options that military leaders explore in response to adversarial activities. Competitiveness in the decision space depends on the ability to develop more options and more effective options, and to develop and execute them more quickly. Numerous approaches to managing decision space exist. NCW is an approach that emphasizes information-rich communications and a high degree of decentralized decision making to generate options and “self synchronized” activities.

4 Yang, Abbass, and Sarker, “Evolving Agents for Network Centric Warfare,” 193-195.

5 Alessandro Zocco and Lucio Tommaso De Paolis, “Augmented Command and Control Table to Support Network-centric Operations,” Defence Science Journal 65, no. 1 (2015): 39-45.

6 The IBM Corporation, specifically IBM Watson Analytics, has been employing “cognitive analytics” and natural language dialogue to perform “big data” analyses. IBM Watson Analytics has been employed in the medical, financial, and insurance fields to perform human-level analytics. These activities include reading medical journals to develop medical diagnoses and treatment plans; performing actuarial reviews of insurance claims; and recommending financial customer engagement and personalized investment strategies.

7 Smith and Anderson, “AI, Robotics, and the Future of Jobs”; Executive Office of the President, “Artificial Intelligence, Automation, and the Economy,” December 2016, https://obamawhitehouse.archives.gov/sites/whitehouse.gov/files/documents/Artificial-Intelligence-Automation-Economy.PDF (accessed online 5 March 2017).

8 Smith and Anderson, “AI, Robotics, and the Future of Jobs”; Executive Office of the President, “Artificial Intelligence, Automation, and the Economy,” December 2016, https://obamawhitehouse.archives.gov/sites/whitehouse.gov/files/documents/Artificial-Intelligence-Automation-Economy.PDF (accessed online 5 March 2017).

9 Jim Storr, “A Command Philosophy for the Information Age: The Continuing Relevance of Mission Command,” Defence Studies 3, no. 3 (2003): 119-129.