[Editor’s Note: Regular readers of the Mad Scientist Laboratory will recall that one of the twelve key conditions driving the Operational Environment in the next ten years is Weapons of Mass Destruction, per the TRADOC G-2’s The Operational Environment 2024-2034: Large-Scale Combat Operations:

“Adversaries view weapons of mass destruction (WMD) as an asymmetric advantage that has an outsized impact on U.S. operations and will likely seek to employ WMD in LSCO.”

Indeed, chemical weapons have been repeatedly employed on the battlefields of Ukraine by Russian forces and in clandestine targeted assassinations by both Russian and North Korean agents. Meanwhile, virologists recently spent $100,000 and used genetic material received through the mail to genetically re-engineer an extinct vaccinia (horsepox) virus, which is related to the variola virus that causes smallpox.

As proclaimed Mad Scientist Dr. James Canton has noted, “exponential convergences” of technologies are occurring, facilitated at machine speed by Artificial Intelligence (AI). These exponential convergences will generate “extremely complex futures” that may afford strategic advantage to those who recognize and leverage them — to include nefarious actors seeking asymmetric advantages.

Army Mad Scientist welcomes guest blogger Jared Kite with today’s submission exploring how the exponential convergence of AI and biological and chemical weapons is creating “a dynamic and evolving complex operational environment pos[ing] potential threats to U.S., allied, and partner forces” — Read on!]

A significant challenge with a biological or chemical weapons threat is its convergence with the additional variable of artificial intelligence (AI).

The 2022 National Defense Strategy (NDS) requires the U.S. military to be able to operate and win in a chemical or biological environment, both in Large-Scale Combat Operations (LSCO) and when confronted with an asymmetric threat such as a terrorist attack.1 Military leaders must understand how adversaries might use chemical and biological weapons, or similar capabilities, to shape the battlefield across the entire spectrum of military operations. The NDS explains the strategic and operational challenges, while Russia’s recent use of chemical weapons in the Ukraine conflict2 indicates the tactical implications on the battlefield. United States Army Central (USARCENT) recently conducted an exercise showing how an unmanned aircraft system (UAS) equipped with chemical weapons might induce a mass casualty event, illustrating the threat chemical weapons pose in a tactical environment.3

Intersection of AI and bio-chem warfare specifically.

Complicating the chemical-biological threat is its potential convergence with AI. Threat actors could use AI to design an exclusively military or dual-use device,4 to develop dangerous and undetectable pathogens,5 or to produce equipment that enables the creation of a biological agent.6 China emphasized in its 2017 State Council Notice report that the country needed to carry out “large-scale genome recognition, proteomics, metabolomics, and other research and development…based on AI.”

China currently maintains the capability to edit genes, as demonstrated by its dual-use ‘CRISPR-Cas9’ technology. CRISPR, or clustered regularly interspaced short palindromic repeats, is the hallmark of a bacterial defense system. CRISPR-Cas9 is a genome editing technology that scientists can use to permanently modify genes in living cells and organisms. What worries some U.S. officials is that China could use its CRISPR-Cas9 technology to genetically build and modify a disease to target a specific population without harming its own citizens.7 Additionally, “CRISPR and other gene editing systems [could] be used to modify or foster novel prions”8 (misfolded proteins that cause fatal, transmissible neurodegenerative diseases in humans and animals).

Experimentation with AI and hypothetical attack planning

A recent RAND experiment involving 12 “red teams,” whose members had varying operational, biological, and Large Language Model (LLM, e.g., ChatGPT) experience, concluded that a malign actor with the time, advanced skills, motivation, and additional resources could use a current or future LLM to plan a biological attack.9 The RAND researchers expressed concern that AI could fill knowledge gaps about how to harvest and weaponize bacteria. Non-state actors have so far tried and failed to weaponize biological agents,10 but a terrorist or other group could use AI to close those gaps.

RAND researchers used various LLMs and online search engines in realistic scenarios. Although the study found no statistically significant difference between red teams using LLMs and those using standard online search engines, the possibility cannot be ruled out that a malign actor (state or non-state) could use an emerging AI program to enable planning, particularly given the pace at which AI is advancing. To stay ahead, leaders can work to understand current AI capabilities, what the bio-chem threats are, and how the two may intersect. They can then think creatively about ways to include these variables in their planning processes (e.g., by including an insidious OPFOR who has these capabilities at their fingertips). Tailored exercises could incorporate malicious actors using LLMs to plan, resource, and execute attacks.

In a separate and significant series of experiments, Canadian and U.S. researchers used AI to design approximately 40,000 toxic chemical agents in a laboratory setting. The agents included a deadlier version of the VX nerve agent, produced merely by the scientists ‘flipping a switch’ and inverting an algorithmic LLM code from “therapeutic” to “toxic.” The experiments immediately captured the attention of the U.S. Government. As the head of the study and CEO of U.S.-based Collaborations Pharmaceuticals (CPI) further noted, AI could be used to design a deadly agent to circumvent security protocols.11

Beyond the traditional LLM

A biological design tool (BDT) is a specialized kind of LLM that scientists use to develop biological-technological solutions, such as synthetic DNA strands.12 BDT users must not only make requests and run calculations in the BDT; they must also conduct experimental lab testing.13 Although a BDT may be beyond the current capability of a non-state threat actor, proliferation is possible, particularly given how new BDTs are and the paucity of analysis regarding their impact on the biological threat scene.14

A dynamic and evolving complex operational environment poses potential threats to U.S., allied, and partner forces.

According to the Journal of Advanced Military Studies, advances in the threat environment will increase the risk of NATO forces being confronted with a biological weapon (specifically, a genetically modified weapon) by 2030.15 Our adversaries view these weapons as an asymmetric advantage that may have an outsized impact on U.S. operations and will likely seek to employ them in LSCO. Considering the changing Operational Environment and the RAND and other studies, leaders at any echelon can be proactive by wargaming possible scenarios that incorporate LLMs and other AI tools. Even if it’s not feasible to use an actual LLM in training, the concept and theory behind its potential use may be employed to think critically and creatively through possible scenarios during planning. The following is an explanation of how the concept behind LLMs may be used in planning, without using an actual AI application.

Planning to prevent or mitigate chemical or biological attacks should be part of training and exercises at all echelons.

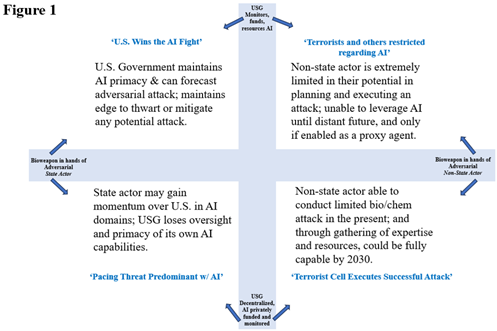

Figure 1 is an example of an Alternative Futures Analysis (AFA), a critical and creative thinking tool that any planning team can use to develop scenarios.16 Taking the top right quadrant in Figure 1 as an example, the scenario would be the U.S. Government regulating AI, funding research and development of AI programs, and closely monitoring and resourcing privately funded AI initiatives. In that scenario, an adversarial non-state actor planning a bio/chemical attack using AI would likely be limited in what it could achieve.

After developing the scenarios, the team could consider which indicators might lead to each respective scenario and could also anticipate how current decisions or strategies would fare in each one. They could further identify alternative policies that may work better across all futures or in specific ones. Finally, the team could determine how to mitigate and/or address the indicators. Analysts can then ‘rinse and repeat,’ building additional AFAs by identifying different axes and developing new scenarios.

Figure 1. AFA designed by the author, adapted from Peter Schwartz’s The Art of the Long View

Now switch the north axis to “an attack using an AI-controlled unmanned system,” and the south axis to “an attack through conventional means,” and the east/west axes to “toxic industrial chemical (TIC)” vs. a “chemical warfare agent (CWA).” This would spur a whole new discussion among the team and may require incorporating outside experts to assist in the analysis. Military organizations from tactical through strategic levels can use the AFA to think critically and creatively through possible futures, incorporating the tool into their decision-making processes.
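For planning teams that want to keep track of the pairings, the axis swap described above can be sketched in a few lines of Python. This is only an illustrative aid, not part of the AFA method itself: the function name `build_quadrants` is hypothetical, and because the text does not say which chemical category sits on the east side, the east/west ordering used here is an assumption.

```python
from itertools import product


def build_quadrants(north_south, east_west):
    """Pair each pole of one axis with each pole of the other.

    Both arguments are 2-tuples of pole labels. The result is a list of
    four dicts, one per quadrant of the 2x2 AFA grid.
    """
    return [
        {"north_south": ns, "east_west": ew}
        for ns, ew in product(north_south, east_west)
    ]


# Axis poles taken from the text. Which chemical category is "east" and
# which is "west" is an assumption made only for this sketch.
north_south = (
    "an attack using an AI-controlled unmanned system",
    "an attack through conventional means",
)
east_west = (
    "toxic industrial chemical (TIC)",
    "chemical warfare agent (CWA)",
)

quadrants = build_quadrants(north_south, east_west)

for number, quadrant in enumerate(quadrants, start=1):
    print(f"Scenario {number}: {quadrant['north_south']} / {quadrant['east_west']}")
```

Each of the four printed pairings is a starting point for discussion; the indicators, policy options, and mitigation steps for each still have to come from the team and any outside experts.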

Conclusion

Countering malicious use of AI at the strategic level should include a deliberate, holistic, and decisive plan to resource the military and other government agencies with subject matter expertise and additional capabilities to remain ahead in AI innovation.17 The U.S. must build and maintain primacy in understanding AI’s vast potential and risks. The U.S. should not, however, discount non-AI capabilities that adversaries maintain to produce and deliver a biological or chemical weapon.18 AI should be part of a holistic effort to understand and manage friendly and adversarial capabilities and intentions. Planners should realize AI can be either an enabler or a potential inhibitor. Humans may mistakenly believe that AI and other machine learning programs can absolve them of thinking critically and creatively through problem-solving processes. Army leaders should consider how AI can help confront ‘technical’ rather than ‘adaptive’ challenges.19

Dynamics in the OE continue to evolve, particularly with the rapid advancements in technology, the continued threat of adversaries using asymmetric means to gain an advantage (such as using a bio or chemical weapon simply to delay a deployment or interfere with an operational mission),20 and the human responses to those advancements.

If you enjoyed this post, check out the TRADOC G-2’s new OE Assessment — The Operational Environment 2024-2034: Large-Scale Combat Operations

Explore the TRADOC G-2‘s Operational Environment Enterprise web page, brimming with information on the OE and how our adversaries fight, including:

Our China Landing Zone, full of information regarding our pacing challenge, including ATP 7-100.3, Chinese Tactics, BiteSize China weekly topics, People’s Liberation Army Ground Forces Quick Reference Guide, and our thirty-plus snapshots captured to date addressing what China is learning about the Operational Environment from Russia’s war against Ukraine (note that a DoD Common Access Card [CAC] is required to access this last link).

Our Russia Landing Zone, including the BiteSize Russia weekly topics. If you have a CAC, you’ll be especially interested in reviewing our weekly RUS-UKR Conflict Running Estimates and associated Narratives, capturing what we learned about the contemporary Russian way of war in Ukraine over the past two years and the ramifications for U.S. Army modernization across DOTMLPF-P.

Our Running Estimates SharePoint site (also requires a CAC to access), containing our monthly OE Running Estimates, associated Narratives, and the 2QFY24 and 3QFY24 OE Assessment TRADOC Intelligence Posts (TIPs).

Check out the following related Mad Scientist Laboratory content addressing Chemical and Biological Threats:

CRISPR Convergence, by proclaimed Mad Scientist Howard R. Simkin

Designer Genes: Made in China? by proclaimed Mad Scientist Dr. James Giordano and Joseph DeFranco

WMD Threat: Now and in the Future

The Resurgent Scourge of Chemical Weapons, by Ian Sullivan

A New Age of Terror: New Mass Casualty Terrorism Threat and A New Age of Terror: The Future of CBRN Terrorism by proclaimed Mad Scientist Zak Kallenborn

Dead Deer, and Mad Cows, and Humans (?) … Oh My! by proclaimed Mad Scientists LtCol Jennifer Snow and Dr. James Giordano, and Joseph DeFranco

Heeding Breaches in Biosecurity: Navigating the New Normality of the Post-COVID Future, by John Wallbank and Dr. James Giordano

About the Author: Jared Kite is currently an analyst with TRADOC G2 OE-I at Fort Leavenworth, KS, supporting Operational Environment and threat integration at the Combat Training Centers and U.S. Army Centers of Excellence. His research interests include PLA advancements in artificial intelligence and uncrewed systems in support of informationized and intelligentized warfare.

Disclaimer: The views expressed in this blog post do not necessarily reflect those of the U.S. Department of Defense, Department of the Army, Army Futures Command (AFC), or Training and Doctrine Command (TRADOC).

1 Department of Defense, “2022 National Defense Strategy of the United States of America.” Defense Technical Information Center, https://apps.dtic.mil/sti/trecms/pdf/AD1183514.pdf, (accessed May 2, 2024). Along with the biological and chemical threat, the NDS also certainly emphasizes the nuclear threat; however, this paper focuses on the biological-chemical threat, specifically the intersection with AI.

2 Pierre E. Ngendakumana. “Russia Used Chemical Weapons in Ukraine, US Says,” Politico, https://www.politico.eu/article/us-accuses-russia-of-using-chemical-weapons-ukraine (accessed on May 10, 2024).

3 Anri Baril, “USARCENT Participates in Exercise Protection Shield III,” U.S. Central Command, https://www.centcom.mil/MEDIA/NEWS-ARTICLES/News-Article-View/Article/2940702/usarcent-participates-in-exercise-protection-shield-iii/ (accessed May 13, 2024).

4 Christopher A. Mouton, Caleb Lucas, and Ella Guest, “The Operational Risks of AI in Large-Scale Biological Attacks: Results of a Red-Team Study,” RAND, https://www.rand.org/pubs/research_reports/RRA2977-2.html (accessed January 30, 2024).

5 Stephanie Batalis, “AI and Biorisk: An Explainer,” Center for Security and Emerging Technology, https://cset.georgetown.edu/publication/ai-and-biorisk-an-explainer/ (accessed February 13, 2024).

6 Yasmin Tadjdeh, “Pentagon Reexamining How It Addresses Chem-Biological Threats,” National Defense, https://www.nationaldefensemagazine.org/articles/2021/10/27/pentagon-reexamining-how-it-addresses-chem-bio-threats (accessed March 8, 2024).

7 Corey Pfluke, “Biohazard: A Look at China’s Biological Capabilities and the Recent Coronavirus Outbreak.” Air University Wild Blue Yonder online journal, https://www.airuniversity.af.edu/Wild-Blue-Yonder/Article-Display/Article/2094603/biohazard-a-look-at-chinas-biological-capabilities-and-the-recent-coronavirus-o/ (accessed May 25, 2024).

8 Jennifer Snow, James Giordano, and Joseph DeFranco, “Dead Deer, and Mad Cows, and Humans (?)…Oh My!,” Mad Scientist Laboratory blog, https://madsciblog.tradoc.army.mil/143-dead-deer-and-mad-cows-and-humans-oh-my/#_ftn18. (accessed June 4, 2024).

9 Mouton, et al., 2024.

10 Mouton, et al., 2024. Researchers explain that although the 1995 Aum Shinrikyo cult attempt to spray botulinum toxin in a Tokyo subway was a failure, terrorists or other malign actors could use AI, specifically advanced LLMs, to assist them in planning a biological attack by providing research assistance across the phases of attack planning.

11 Chemistry and Industry, “AI: The Dark Side,” Wiley Online Library, https://doi.org/10.1002/cind.10189 (accessed January 30, 2024).

12 National Security Commission on Emerging Biotechnology, “White paper 3: Risks of AIxBio,” https://www.biotech.senate.gov/wp-content/uploads/2024/01/NSCEB_AIxBio_WP3_Risks.pdf (accessed March 8, 2024).

13 Batalis, 2023.

14 Matthew Walsh, “Why AI for Biological Design Should be Regulated Differently than Chatbots,” Bulletin of the Atomic Scientists, https://thebulletin.org/2023/09/why-ai-for-biological-design-should-be-regulated-differently-than-chatbots (accessed March 20, 2024).

15 Dominik Juling, “Future Bioterror and Biowarfare Threats for NATO’s Armed Forces Until 2030.” Journal of Advanced Military Studies 14, no. 1 (2023): 118-143, https://muse.jhu.edu/article/901770, (accessed February 4, 2024).

16 Peter Schwartz, The Art of the Long View: Planning for the Future in an Uncertain World. (Currency, 2012). The Alternative Futures Analysis tool (among others) is also referenced and explained in the Red Team Handbook, available at https://usacac.army.mil/sites/default/files/documents/ufmcs/The_Red_Team_Handbook.pdf.

17 Carter C. Price and K. Jack Riley, “Policymaking Needs to Get Ahead of Artificial Intelligence.” RAND, https://www.rand.org/pubs/commentary/2024/01/policymaking-needs-to-get-ahead-of-artificial-intelligence.html (accessed February 13, 2024).

18 Batalis, 2023.

19 Ronald A. Heifetz, Alexander Grashow, and Martin Linsky. The Practice of Adaptive Leadership: Tools and Tactics for Changing Your Organization and the World. (Harvard Business Press, 2009).

20 Zachary Kallenborn, “A New Age of Terror: New Mass Casualty Terrorism Threats,” Mad Scientist Laboratory blog, September 16, 2019, https://madsciblog.tradoc.army.mil/179-a-new-age-of-terror-new-mass-casualty-terrorism-threats/ (accessed June 4, 2024).