“AI-enabled IO present a more pressing strategic threat than the physical hazards of slaughter-bots or even algorithmically-escalated nuclear war. IO are efforts to “influence, disrupt, corrupt, or usurp the decision-making of adversaries and potential adversaries;” here, we’re talking about using AI to do so. AI-guided IO tools can empathize with an audience to say anything, in any way needed, to change the perceptions that drive those physical weapons. Future IO systems will be able to individually monitor and affect tens of thousands of people at once. Defense professionals must understand the fundamental influence potential of these technologies if they are to drive security institutions to counter malign AI use in the information environment.” — Excerpted from Influence at Machine Speed: The Coming of AI-Powered Propaganda by then-MAJ Chris Telley

[Editor’s Note: These prescient words, published on this site back in 2018, continue to be realized in today’s Operational Environment. Through constant barrages of mis- and disinformation, our adversaries continue to harness Artificial Intelligence (AI) to exploit existing biases to enhance their messaging. Today’s post by Mad Scientist Summer Intern Aldrin Yashko explores how the recent Iran-Israel 12-Day War showcased the reach and power of Generative AI-enhanced Information Operations (IO). But how do you inoculate the world against this constant bombardment of adversarial messaging, generated and transmitted at machine-speed and scale to exploit pre-existing biases? Ms. Yashko proposes harnessing the power of AI to promulgate our own narrative, harnessing the power of facts to transmit a bright beam of truth, designed to cut through and dispel the murk of adversarial counter-factual messaging — Read on!]

During the 12-Day War between Iran and Israel, as the two sides exchanged fires in the real world, a different conflict was playing out online. Both actors boosted their presence on social media in a struggle to influence global opinion. However, Iran incorporated a new twist. Shortly after the Iranian military announced that it had successfully shot down an Israeli F-35, various social media accounts began to share an image which depicted the remains of a fighter jet resting on the ground. The image was clearly AI-generated; the plane was bigger than many buildings in the photo and its dimensions were distorted, among many other glaring discrepancies. Nonetheless, it was shared on social media by those intending to reinforce the Iranian narrative and circulated widely beyond its original disseminators.1 The employment of AI for propaganda purposes represents a new threat in the Operational Environment (OE), as AI is being increasingly harnessed by U.S. adversaries for Information Operations (IO).

The use of AI for IO is developing into a two-pronged threat. The first issue is that adversaries can now efficiently portray fictional events, including “successful” strikes inflicted on opposing forces, in a manner that will become increasingly difficult for untrained viewers to discern. The second is that propaganda is now less manpower- and cost-intensive. While these threats have traditionally been propagated through tools such as image editing and social media, AI represents an acceleration in feasibility, scale, and dispersal of capabilities. Several key U.S. adversaries, including Iran, Russia, and China, have begun to use AI as a critical component of their IO, and there is no reason to think that they will slow down.

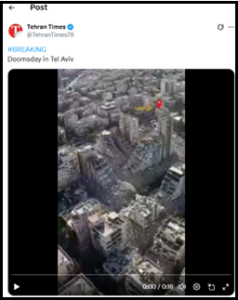

Iran’s operations during the conflict with Israel are illustrative of how AI is increasingly used to depict fictional events. In one instance, the state media outlet “Tehran Times” posted supposed overhead aerial videos of bombed-out buildings, complete with a geographical marker and the words “Tel Aviv” in Farsi. The post text similarly read “Doomsday in Tel Aviv,” demonstrating a clear intent to pass off the videos as genuine damage.2 Overt state news media were not the only ones who posted such content. Amid Iranian reports that Ben Gurion airport had been successfully hit by ballistic missiles, sympathetic non-state accounts shared an AI-generated video of building debris and smoldering airframes with the caption “Israeli airports in Tel Aviv after the Iranian missile strike.”3 Combined, these AI generations and numerous others amassed millions of views over platforms such as X and TikTok.4 Advances in AI image and video quality herald a possible new challenge: in times of crisis and conflict, adversaries have a new toolset that they can utilize to portray an ‘alternative reality’ to the true situation on the ground. A city might fall out of an adversary’s control, and all adversarial personnel might be scattered, but if one soldier in a bunker can create content depicting otherwise, the truth may be distorted to a credulous global audience, reinforcing their predisposed biases.

In addition to the threat of deceptive content, Russian and Chinese IO are demonstrating how AI can serve as a force multiplier in reaching target audiences. Recently, Russian bot farms have undergone a notable evolution. Initially, bot accounts needed to be controlled by individual people. Now, operators can use AI to create accounts and write posts, freeing each human to supervise a larger number of fake personas. For example, in July of 2024, the FBI and international partners published an advisory on a complex Russian bot farm operation that had been enhanced with AI. According to the advisory, Russian operatives used AI software called “Meliorator” to generate and operate hundreds of fake personas on social media. Termed “souls,” these fake accounts often contained AI-generated profile photos and could autonomously complete a variety of tasks, from liking, reposting, and commenting on other users’ content to creating their own original posts.5 The FBI identified 968 accounts that were associated with “Meliorator,” and these numbers likely represent just the tip of the iceberg of Russia’s true AI-enabled trolling capacities.6

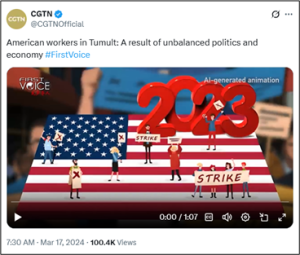

China is also actively utilizing AI for propaganda purposes. In March of 2024, broadcaster CGTN began posting AI-generated videos as part of a “Fractured America” series. These videos depicted animated scenes designed to assert American economic distress and income inequality by contrasting scenes of striking workers and factories with those of affluent elites. The animation is low-quality, and the English audio overlay is awkward; nonetheless, the video series showcases the ease with which China and other adversaries can create comprehensive propaganda pieces with coherent arguments and visuals with much less financial and human exertion.7

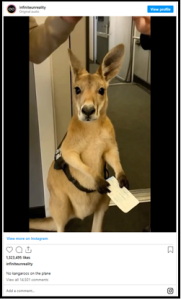

Much of the AI output currently being used by U.S. adversaries can still easily be flagged as computer-generated. However, it is important to remember that, while these models are still in their infancy, pieces of AI media are already being taken for the truth. For example, in May of 2025 a video went viral that depicted a woman trying to bring her “emotional support kangaroo” onto a commercial flight. Thousands of social media users expressed their sympathy for the poor kangaroo, which stood politely in front of the boarding ramp holding its ticket while airline agents argued with its owner.8 Such generations are mostly benign, but they demonstrate that internet users can be initially fooled by AI, despite the human belief that we can always discern what’s real and what’s not. It is reasonable to assess that, within a short timeframe, the professional operations of adversaries will likely begin to produce similar results.

However, AI still has its limitations. Effective propaganda is characterized by its ability to connect with and influence the human mind. As such, the most effective propaganda must be infused with human influences. Additionally, AI is still viewed with distrust by large segments of the global population. By over-relying on this technology, especially with the intent to lie and deceive, adversaries may risk losing trust among target audiences. Indeed, the U.S. Army and other partners might find that the best counter to adversarial AI use is adhering to the truth, as opposed to using computer algorithms to convey a false reality. Proactively “inoculating” Soldiers and citizens alike with a national narrative highlighting how to discriminate false narratives from the truth and encouraging independent critical thinking might also serve to counter the effects of our adversaries’ AI-generated propaganda.

The U.S. Army can view the rise of AI propaganda as both a challenge and an opportunity. Adversarial uses of AI technology that distort the truth should be highlighted whenever possible; IO based on real-world people and events should be used to instill trust. However, the Army should also investigate how best to leverage the cost- and labor-reducing benefits of AI, whether through automating the distribution of accurate information or gathering data on the information environment for analysis. As AI is increasingly employed by our adversaries to distort the facts, the truth will become an even more powerful weapon in IO.

If you enjoyed this post, check out the TRADOC Pamphlet 525-92, The Operational Environment 2024-2034: Large-Scale Combat Operations

Explore the TRADOC G-2’s Operational Environment Enterprise web page, brimming with authoritative information on the Operational Environment and how our adversaries fight, including:

Our China Landing Zone, full of information regarding our pacing challenge, including ATP 7-100.3, Chinese Tactics, How China Fights in Large-Scale Combat Operations, BiteSize China weekly topics, and the People’s Liberation Army Ground Forces Quick Reference Guide.

Our Russia Landing Zone, including the BiteSize Russia weekly topics. If you have a CAC, you’ll be especially interested in reviewing our weekly RUS-UKR Conflict Running Estimates and associated Narratives, capturing what we learned about the contemporary Russian way of war in Ukraine over the past two years and the ramifications for U.S. Army modernization across DOTMLPF-P.

Our Iran Landing Zone, including the Iran Quick Reference Guide and the Iran Passive Defense Manual (both require a CAC to access).

Our North Korea Landing Zone, including Resources for Studying North Korea, Instruments of Chinese Military Influence in North Korea, and Instruments of Russian Military Influence in North Korea.

Our Irregular Threats Landing Zone, including TC 7-100.3, Irregular Opposing Forces, and ATP 3-37.2, Antiterrorism (requires a CAC to access).

Our Running Estimates SharePoint site (also requires a CAC to access) — documenting what we’re learning about the evolving OE. Contains our monthly OE Running Estimates, associated Narratives, and the quarterly OE Assessment TRADOC Intelligence Posts (TIPs).

Then check out the following Mad Scientist Laboratory blog post content addressing how AI can be harnessed to conduct cognitive warfare:

Influence at Machine Speed: The Coming of AI-Powered Propaganda by MAJ Chris Telley

The Exploitation of our Biases through Improved Technology, by Raechel Melling

Damnatio Memoriae through AI and What is the Threshold? Assessing Kinetic Responses to Cyber-Attacks, by proclaimed Mad Scientist Marie Murphy

China and Russia: Achieving Decision Dominance and Information Advantage by Ian Sullivan, along with the comprehensive paper from which it was excerpted

Information Advantage Contribution to Operational Success, by CW4 Charles Davis

Gaming Information Dominance and Russia-Ukraine Conflict: Sign Post to the Future (Part 1) by Kate Kilgore

Sub-threshold Maneuver and the Flanking of U.S. National Security and Is Ours a Nation at War? U.S. National Security in an Evolved — and Evolving — Operational Environment, by Dr. Russell Glenn

The Erosion of National Will – Implications for the Future Strategist, by Dr. Nick Marsella

A House Divided: Microtargeting and the next Great American Threat, by 1LT Carlin Keally

Weaponized Information: What We’ve Learned So Far…, Insights from the Mad Scientist Weaponized Information Series of Virtual Events, and all of this series’ associated content and videos

Weaponized Information: One Possible Vignette and Three Best Information Warfare Vignettes

LikeWar — The Weaponization of Social Media

The Death of Authenticity: New Era Information Warfare

Active Defense: Shaping the Threat Environment and The Information Disruption Industry and the Operational Environment of the Future, by proclaimed Mad Scientist Vincent H. O’Neil, as well as his associated video presentation from 20 May 2020, part of the Mad Scientist Weaponized Information Series of Virtual Events.

In the Cognitive War – The Weapon is You! by Dr. Zac Rogers

Non-Kinetic War, Global Entanglement and Multi-Reality Warfare and associated podcast, with COL Stefan Banach (USA-Ret.)

The Future of War is Cyber! by CPT Casey Igo and CPT Christian Turley

About Today’s Author: Aldrin Yashko is an intern with the TRADOC G-2 Mad Scientist Initiative. She graduated from William & Mary in May 2025 with a BA in International Relations. Her prior experience includes interning at U.S. European Command and working as a developer with William & Mary’s Wargaming Lab. Aldrin plans on pursuing a career in national security and intelligence.

Disclaimer: The views expressed in this blog post do not necessarily reflect those of the U.S. Department of Defense, Department of the Army, Army Futures Command (AFC), or Training and Doctrine Command (TRADOC).

1 “The AI Slop Fight Between Iran and Israel.” 404Media, June 18, 2025. https://www.404media.co/the-ai-slop-fight-between-iran-and-israel/ and Murphy, Matt, Olga Robinson, and Shayan Sardarizadeh. “Israel-Iran conflict unleashes wave of disinformation.” BBC Verify. June 20, 2025. https://www.bbc.com/news/articles/c0k78715enxo

2 Tehran Times. X. June 14, 2025. https://x.com/TehranTimes79/status/1933850991599767749

3 Hassan Alkaabi. X. June 19, 2025. https://mvau.lt/media/c09976ca-d08e-43bc-b734-3618fc652376

4 Murphy, et al.

5 “State-Sponsored Russian Media Leverages Meliorator Software for Foreign Malign Influence Activity.” Joint Cybersecurity Advisory. July 9, 2024. https://www.ic3.gov/CSA/2024/240709.pdf

6 “Justice Department Leads Efforts Among Federal, International, and Private Sector Partners to Disrupt Covert Russian Government-Operated Social Media Bot Farm.” US Department of Justice. July 9, 2024. https://www.justice.gov/archives/opa/pr/justice-department-leads-efforts-among-federal-international-and-private-sector-partners?os=httpswww.google&ref=app

7 Hale, Erin. “China turns to AI in propaganda mocking the ‘American Dream.’” Al Jazeera. March 29, 2024. https://www.aljazeera.com/economy/2024/3/29/china-turns-to-ai-in-propaganda-mocking-the-american-dream

8 Di Placido, Dani. “‘Emotional Support Kangaroo’ Goes Viral – But It’s Completely Fake.” Forbes. May 28, 2025. https://www.forbes.com/sites/danidiplacido/2025/05/28/emotional-support-kangaroo-video-goes-viral-but-its-completely-fake/