“Russia’s vast arsenal of non-strategic nuclear weapons helps it to offset Western conventional superiority and provide formidable escalation management options in theater war scenarios.” — Annual Threat Assessment of the U.S. Intelligence Community, March 2025

[Editor’s Note: Army Mad Scientist and the TRADOC G-2 continue to benefit from an enduring and successful record of collaboration with students from The College of William and Mary. Today’s post by summer e-intern Charlotte Feit-Leichman is just the latest in a long line of insightful submissions the Mad Scientist Laboratory has had the privilege of publishing — helping to broaden our understanding of the Operational Environment.

Ms. Feit-Leichman explores how the advent of battlefield autonomy, the integration of artificial intelligence to facilitate decision making at machine speed, and the loosening of Russia’s nuclear command authority could converge to increase the possibility of the unthinkable — either the intentional or the accidental, erroneous, or hacked release of Russia’s tactical nuclear weapons. During the height of the Cold War, the U.S. Army trained and prepared to fight and win decisively in a nuclear-contaminated environment. Ms. Feit-Leichman presents a convincing case for the Army to return to this level of heightened readiness — Read on!]

Russia’s convergence of artificial intelligence (AI) and autonomous systems could signal an increase in the risk of tactical nuclear weapons being used on the battlefield. AI-enabled autonomous weapon systems use computer algorithms to identify and attack a target without manual control by a human operator.1 AI also introduces machine learning, which uses data to make predictions and to improve how processes are carried out in the future.2 The advent of battlefield autonomous systems enables lower-echelon units and individuals to deliver lethal fires more quickly, with greater precision, over longer distances. Russia’s rapid integration of AI into these autonomous weapons systems, coupled with suggestions from its military leadership that its nuclear command authority could be loosened, raises the possibility that tactical nuclear weapons could be present and employed on the battlefield.3 Shortening Russia’s nuclear command chain increases the risk of accidents, because automation reduces the time available to identify and prevent machine errors. The convergence of AI, battlefield autonomy, and tactical nuclear weapons under Russia’s loosened nuclear command authority poses a destabilizing threat in the Operational Environment and may indicate that the U.S. Army should resume training and preparing to fight and win decisively in a nuclear environment.

Russia has repeatedly stressed the importance of integrating AI into its military technology. President Vladimir Putin has stated that the leader in AI development will become “the ruler of the world,” making leadership in AI technology a facet of Russia’s battle for global power with the West.4 AI technology has converged rapidly with Russian weapons systems throughout the war in Ukraine. One example is the development of autonomous one-way attack drones that use machine vision to counter electronic warfare.5 The convergence of military technology with AI produces distinct battlefield advantages: unmanned weapons impervious to signal jamming, faster response times due to quick and efficient data sorting, and the identification of patterns that human analysts might not recognize. As technology advances and Russia’s nuclear doctrine evolves, AI integration into Russian nuclear weapons systems, along with its consequences, may quickly become a reality.

Russia’s changing nuclear command authority suggests a devolution of nuclear authority to tactical commanders, raising the risk of nuclear weapons use in the Operational Environment. Russian Deputy Foreign Minister Sergei Ryabkov told a Russian foreign affairs magazine that “conceptual additions and amendments” must be made to the doctrine that calls for nuclear weapons use if there is a perceived threat to “sovereignty and territorial integrity.”6 President Putin has also stated that he “will not rule out lowering the threshold for using nuclear weapons in Russia’s nuclear posture.”7 These statements increase the likelihood of Russian tactical nuclear weapons use, a likelihood further elevated by the tactical nuclear weapons drills that Russian and Belarusian troops have begun.8

Russia has also announced its efforts to integrate AI into the operations of its Strategic Rocket Forces. Sergei Karakayev, commander of the Strategic Rocket Forces, stated that “automated security systems of all mobile and stationary strategic missile complexes that will be placed on combat duty around 2030 will include robotic systems and use AI.”9 This introduces a host of risks ranging from possible accidents to cyberattack vulnerabilities.

Shortening the decision-making process involved in nuclear release by introducing AI systems increases the likelihood of miscalculation and the potential for rapid escalation. Autonomous systems are not immune to error, and human judgement, particularly in the command and control of nuclear weapons systems, is necessary to prevent catastrophic accidents, as the “Petrov incident” illustrates.10 In 1983, a Soviet early-warning satellite system falsely reported that U.S. intercontinental ballistic missiles had been launched toward the Soviet Union. Without Lieutenant Colonel Stanislav Petrov’s “gut instinct” that something was wrong, a misinformed and disastrous nuclear counterstrike on the U.S. could have occurred.11 Human judgement averted the catastrophic effects of this technological error, and automated decision-making processes could supplant that judgement.

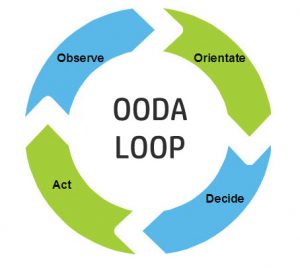

The decision-making process involved in launching a nuclear attack can be explained through the OODA loop model, which consists of four stages: observe, orient, decide, and act.12 At the orient stage, AI sifts through large amounts of information to determine what is most important in that context. For example, an AI system could draw on information from a variety of sensors to determine whether an attack is being launched. Such systems reduce the number of staff who monitor and contextualize data, which could cause human analytical skills to atrophy and amplify decision-making biases.13 AI systems assimilate the information decision-makers feed them. If they learn risky and aggressive behaviors from Russian leadership, they could replicate these biases, further entrenching them in future decision-making. Even where human judgement remains in the OODA loop, the integration of AI reduces human judgement to a “cog in an automatic, regimented system,” raising the risk of automation bias.14

Automation bias occurs when humans, conditioned by the repeated success of algorithms, offload their thinking to the machine and place complete trust in it, even when an unbiased human might recognize that the machine is reporting incorrect information.15 As AI is further integrated, there is not only an increased risk of error due to shorter decision-making cycles but also a greater risk that human judgement will fail to detect errors, including those caused by cyberattacks.

An AI-augmented nuclear command system would create new threat vectors and attack surfaces for hackers, ones that “are highly vulnerable to cyberattacks in ways that traditional military platforms are not.”16 Integrity attacks, which manipulate an AI system’s learning to teach it incorrect information, are the most prevalent.17 Third parties and adversaries of both Russia and the U.S. could exploit these new vulnerabilities to launch a nuclear attack on the U.S. and its allies via Russia, muddying the waters of responsibility and providing themselves with deniability.18 Overall, the integration of AI into Russian nuclear weapons systems heightens the potential for accidental, erroneous, or hacked nuclear release, requiring greater nuclear preparedness from the U.S. Army.

Increased coordination with the Joint Program Executive Office for Chemical, Biological, Radiological, and Nuclear Defense (JPEO-CBRND) through the Army Technology Transfer Program (T2) can increase the U.S. Army’s resiliency and ability to “fight and win unencumbered in a nuclear environment.”19 JPEO-CBRND sources and distributes sensors, specialized equipment, and medical technologies that will make monitoring more precise and equip Soldiers with the requisite gear for operating in a nuclear-contaminated environment.20 JPEO-CBRND will provide U.S. Army Soldiers with protective clothing and decontamination equipment to mitigate the effects of fighting in such an environment. The U.S. Army may also need to resume training on how to properly employ this equipment across its individual Soldier, crew, and collective tasks and battle drills, and to incorporate simulated nuclear contamination conditions into both home station training exercises and Combat Training Center rotations.

Increasing the frequency of radiological response exercises at the strategic, operational, and tactical levels will improve the U.S. Army’s nuclear response capabilities by providing feedback data to help mitigate potential complications in an actual nuclear battlespace.21 Current U.S. Army nuclear response training is relatively fragmented across agencies and units, which threatens the effectiveness of the overall response. Successfully operating in a nuclear-contaminated battlespace will require preparedness achieved through “multi-echelon training” at least once a year.22 By taking measures to strengthen our ability to operate in a nuclear-contaminated environment, the U.S. Army can prepare to fight and win decisively should the unthinkable result from Russia’s (or any other adversary’s) convergence of AI, battlefield autonomy, and tactical nuclear weapons.

If you enjoyed this post, review TRADOC Pamphlet 525-92, The Operational Environment 2024-2034: Large-Scale Combat Operations.

Explore the TRADOC G-2’s Operational Environment Enterprise web page, brimming with authoritative information on the Operational Environment and how our adversaries fight, including:

Our China Landing Zone, full of information regarding our pacing challenge, including ATP 7-100.3, Chinese Tactics, BiteSize China weekly topics, People’s Liberation Army Ground Forces Quick Reference Guide, and our thirty-plus snapshots captured to date addressing what China is learning about the Operational Environment from Russia’s war against Ukraine (note that a DoD Common Access Card [CAC] is required to access this last link).

Our Russia Landing Zone, including the BiteSize Russia weekly topics. If you have a CAC, you’ll be especially interested in reviewing our weekly RUS-UKR Conflict Running Estimates and associated Narratives, capturing what we learned about the contemporary Russian way of war in Ukraine over the past two years and the ramifications for U.S. Army modernization across DOTMLPF-P.

Our Iran Landing Zone, including the Iran Quick Reference Guide and the Iran Passive Defense Manual (both require a CAC to access).

Our Running Estimates SharePoint site (also requires a CAC to access), documenting what we’re learning about the evolving OE. It contains our monthly OE Running Estimates, associated Narratives, and the 2QFY24, 3QFY24, 4QFY24, 1QFY25, and 2QFY25 OE Assessment TRADOC Intelligence Posts (TIPs).

Then review the following related Mad Scientist Laboratory content:

Unmanned Capabilities in Today’s Battlespace

Revolutionizing 21st Century Warfighting: UAVs and C-UAS

The Operational Environment’s Increased Lethality

WMD Threat: Now and in the Future

Why the Next “Cuban Missile Crisis” Might Not End Well: Cyberwar and Nuclear Crisis Management, by Dr. Stephen J. Cimbala

An Appropriate Level of Trust…

The Future of the Cyber Domain

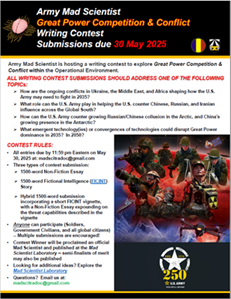

>>>>Reminder: Army Mad Scientist wants to crowdsource your thoughts on Great Power Competition & Conflict — check out the flyer describing our latest writing contest.

All entries must address one of the following writing prompts:

How are the ongoing conflicts in Ukraine, the Middle East, and Africa shaping how the U.S. Army may need to fight in 2035?

What role can the U.S. Army play in helping the U.S. counter Chinese, Russian, and Iranian influence across the Global South?

How can the U.S. Army counter growing Russian/Chinese collusion in the Arctic, and China’s growing presence in the Antarctic?

What emergent technology(ies) or convergences of technologies could disrupt Great Power dominance in 2035? In 2050?

We are accepting three types of submissions:

- 1500-word Non-Fiction Essay
- 1500-word Fictional Intelligence (FICINT) Story
- Hybrid 1500-word submission incorporating a short FICINT vignette, with a Non-Fiction Essay expounding on the threat capabilities described in the vignette

Anyone can participate (Soldiers, Government Civilians, and all global citizens) — Multiple submissions are encouraged!

All entries are due NLT 11:59 pm Eastern on May 30, 2025 at: madscitradoc@gmail.com

Click here for additional information on this contest — we look forward to your participation!

About the Author: Charlotte Feit-Leichman e-interned with Army Mad Scientist during the summer of 2024. Ms. Feit-Leichman is a third-year International Relations student in the St. Andrews and William & Mary Joint Degree Program. She has also worked as an intelligence intern with TRADOC G-2, where she conducted research on the implications of the space domain for the Army.

Disclaimer: The views expressed in this blog post do not necessarily reflect those of the U.S. Department of Defense, Department of the Army, Army Futures Command (AFC), or Training and Doctrine Command (TRADOC).

1 https://crsreports.congress.gov/product/pdf/IF/IF11150

2 https://www.businessnewsdaily.com/10352-machine-learning-vs-automation.html

3 https://www.europeanleadershipnetwork.org/wp-content/uploads/2023/11/Russian-bibliography.pdf

4 https://www.economist.com/business/2024/02/08/vladimir-putin-wants-to-catch-up-with-the-west-in-ai

5 https://warontherocks.com/2024/03/drones-are-transforming-the-battlefield-in-ukraine-but-in-an-evolutionary-fashion/

6 https://www.newsweek.com/russia-ryabkov-putin-nuclear-doctrine-1921849

7 https://www.armscontrol.org/act/2024-07/news/russian-nuclear-posture-may-change-putin-says

8 https://apnews.com/article/russia-belarus-nuclear-drills-ukraine-1a601fd9de0c32158278851cc153c2ce

9 https://www.europeanleadershipnetwork.org/wp-content/uploads/2023/11/Russian-bibliography.pdf

10 https://www.sipri.org/sites/default/files/2019-05/sipri1905-ai-strategic-stability-nuclear-risk.pdf

11 https://www.sipri.org/sites/default/files/2019-05/sipri1905-ai-strategic-stability-nuclear-risk.pdf

12 https://madsciblog.tradoc.army.mil/198-integrating-artificial-intelligence-into-military-operations/

13 https://madsciblog.tradoc.army.mil/198-integrating-artificial-intelligence-into-military-operations/

14 https://www.sipri.org/sites/default/files/2019-05/sipri1905-ai-strategic-stability-nuclear-risk.pdf

15 https://www.sipri.org/sites/default/files/2019-05/sipri1905-ai-strategic-stability-nuclear-risk.pdf

16 https://europeanleadershipnetwork.org/commentary/navigating-cyber-vulnerabilities-in-ai-enabled-military-systems/

17 https://europeanleadershipnetwork.org/commentary/navigating-cyber-vulnerabilities-in-ai-enabled-military-systems/

18 https://www.sipri.org/sites/default/files/2019-05/sipri1905-ai-strategic-stability-nuclear-risk.pdf

19 https://www.jpeocbrnd.osd.mil

20 https://www.t2.army.mil/T2-Laboratories/Designated-Laboratories/Joint-Program-Executive-Office-for-Chemical-Biological-Radiological-and-Nuclear-Defense/

21 https://www.jtfcs.northcom.mil/MEDIA/NEWS-ARTICLES/Article/3796491/critical-change-before-the-disaster-the-necessity-of-modernizing-the-approach-t/

22 https://www.jtfcs.northcom.mil/MEDIA/NEWS-ARTICLES/Article/3796491/critical-change-before-the-disaster-the-necessity-of-modernizing-the-approach-t/