[Editor’s Note: Mad Scientist Laboratory is pleased to present our latest edition of “The Queue” – a monthly post listing the most compelling articles, books, podcasts, videos, and/or movies that the U.S. Army’s Mad Scientist Initiative has come across during the previous month. In this anthology, we address how each of these works either informs or challenges our understanding of the Future Operational Environment (OE). We hope that you will add “The Queue” to your essential reading, listening, or watching each month!]

1. “Boston Dynamics prepares to launch its first commercial robot: Spot,” by James Vincent, The Verge, 5 June 2019.

Day by Day Armageddon: Ghost Road, by J.L. Bourne, Gallery Books, 2016, 241 pages.

“Metalhead,” written by Charlie Brooker / directed by David Slade, Black Mirror, Netflix, Series 4, Episode 5.

Boston Dynamics, progenitor of a wide range of autonomous devices, is now poised to retail its first robotic system. During an interview with The Verge at Amazon’s re:MARS Conference, Boston Dynamics CEO Marc Raibert announced that “Spot the robot will go on sale later this year.” Spot is “a nimble robot that handles objects, climbs stairs, and will operate in offices, homes and outdoors.” Its “robot arm is a prime example of Boston Dynamics’ ambitious plans for Spot. Rather than selling the robot as a single-use tool, it’s positioning it as a ‘mobility platform’ that can be customized by users to complete a range of tasks.” Given its inherent versatility, Mr. Raibert likens Spot “to … the Android [phone] of Androids,” with the market developing a gamut of apps, facilitating new and innovative adaptations.

But what about the tactical applications of such a device in the Future OE? Two works of fiction explore this capability through storytelling, with disparate visions of the future….

In the fourth installment of his Day By Day Armageddon series, J. L. Bourne imagines one man’s trek across a ravaged America in search of the cure to a zombie apocalypse, and effectively explores the versatility of a Spot-like quadrupedal robot. Stumbling across the remains of a special operator, his protagonist Kil discovers the tablet and wristband controls for a Ground Assault Reconnaissance & Mobilization Robot (GARMR) that, over the course of the novel, he ultimately anthropomorphizes into his “dog” named Checkers. After mastering the device’s capabilities via a tutorial on the tablet, Kil programs and employs Checkers as a roving ISR platform, effectively empowering him as a one-man Anti-Access/Area-Denial (A2/AD) “bubble.” Conducting both perimeter and long-range patrols, it tirelessly detects and alerts Kil to potential threats using its machine vision, audio, and video sensor feeds. Bourne’s versatile GARMR, however, is dependent on man-in-the-loop input and, though capable of executing pre-programmed autonomous operations, remains compliant with current DoD policy regarding autonomy.

The “Metalhead” episode of Netflix’s Black Mirror, however, imagines its eponymous quadruped robot as a lethal autonomous system run amok, relentlessly hunting down humanity’s remnants in a post-apocalyptic Britain. Operating in wolf packs, these man-out-of-the-loop devices sit passively until a human target is acquired, whom they then tirelessly track, run to ground, and kill. The episode riffs on the Skynet trope with another defense program gone rogue, this time an armed and deadly next-gen Spot. U.S. defense policy-makers would be wise to consider, and plan for, the coming commercialization and inevitable democratization of quadrupedal lethal autonomy. In light of recent drone attacks in Syria, Venezuela, and Yemen, a new killer genie is about to be unleashed from its bottle!

2. “Small Businesses Aren’t Rushing Into AI,” by Sara Castellanos and Agam Shah, The Wall Street Journal, 9 June 2019.

While sixty-five percent of firms with more than 5,000 workers are using Artificial Intelligence (AI) or planning on it, only twenty-one percent of small businesses have similar plans. The upfront costs of AI tools and data architecture improvements, along with the scarcity of people capable of implementing AI tools, exceed what many small businesses can afford. While the U.S. Army is not a small business, it faces many similar obstacles.

First, the U.S. Army is not “AI Ready.” While Google and Microsoft are working on AI tools that are not overly reliant on large data sets, today’s tools are trained and fueled by access to reliable big data. Access to our own data is key to enabling AI and to the Army realizing the advantages of speed and improved decision-making. The Army is a data-rich organization with information from previous training events, combat operations, and readiness status, but data rich does not mean data ready. Much of the Army’s data would be characterized as “dark data”: sitting in a silo, accessible only for limited, single-use purposes. To get the Army AI Ready, we need to implement a Service-wide effort to break down the silos and import all of the Army’s data into an open-source architecture that is accessible by a range of AI tools.

Second, the size and dispersed nature of the Army hampers our ability to acquire and retain a high number of people capable of implementing AI tools. This AI-defined future requires the creation of new jobs and skillsets to overcome the coming skills mismatch. The Army is learning as it builds out a cyber-capable force, and these lessons are probably applicable to what we will need to do to support an AI-enabled force. At a minimum, we must address a new form of tech literacy to lead these future formations.

While Google and Microsoft work to reduce the reliance on big data for training AI and lessen the need for AI coding, the Army should begin to improve its “AI Readiness” by implementing new data strategies, exploring new skillsets, and improving force tech literacy.

3. “Have Strategists Drunk the ‘AI Race’ Kool-Aid?” by Zac Rogers, War on the Rocks, 4 June 2019.

“When technological change is driven more by hubris and ideology than by scientific understanding, the institutions that traditionally moderate these forces, such as democratic oversight and the rule of law, can be eroded in pursuit of the next false dawn.”

In this article, Dr. Zac Rogers cautions those who are willing to leap headfirst into the technological abyss. Rogers provides a countering narrative to balance out the tech entrepreneurs who are ready to go full steam ahead with the so-called AI race, breaking down the competition and producing a robust analysis of the unintended effects of the digital age. The full implications of advancing AI offer a sobering reality, replete with warnings of the potential breakdown of sociopolitical stability and of Western societies themselves. While countries continue to invest billions in AI development and innovation, Rogers reminds us that beneath the high-tech veneer of the 21st century we are still “human beings in social systems – to which all the usual caveats apply.” As asserted by Mr. Ian Sullivan, our world is driven largely by thoughts, ideals, and beliefs, despite the increasing global connectivity we experience every day. To forget that we are, as put by Dr. Rogers, “always human” would be to lose touch with the very reality we are augmenting.

Rogers cautions against “idealized cybernetic systems” and implores those spearheading the foray into the technological unknown to take pause and remember what, ultimately, we stand to gain from these developments – and what we stand to lose.

4. “For the good of humanity, AI needs to know when it’s incompetent,” by Nicole Kobie, Wired, 15 June 2019.

Prowler.io is an AI platform for generalized decision-making for businesses aiming to augment human work with machine learning. Prowler.io considers four questions as it sets up the platform: 1) when does the AI know for certain that it’s right; 2) when does it know it’s wrong; 3) when does it know that it’s about to go wrong — timing is key, so humans in the loop have time to react; and 4) “how are we even sure the AI is asking the right questions.” For Prowler.io, keeping humans-in-the-loop is necessary due to the current lack of trust in machine-based decision-making.

The article points out that understanding why your AI-driven fund manager lost money is less useful than preventing bad buys in the first place. “Explainable AI is not enough, you have to have trusted AI – and for that to happen, you need to have human decision-making in the loop.” One failing of AI is that it doesn’t inherently understand its own competency. For example, if a human worker needs help, they can ask for it. The dilemma is how will “understanding of personal limitations [be] built into code?” A worst-case example of this was the two deadly 737 Max crashes: “In both crashes, the commonality was that the autopilot did not understand its own incompetence.”

Correct and timely decisions are paramount for the Army and military applications of AI on the battlefield, now and in the future. “Even if there’s a human-in-the-loop that has oversight, how valuable is the human if they don’t understand what’s going on?” One disconnect is that the military and code writers share a blind spot in how they think about technological progress toward the future. That blind spot is thinking about AI as largely disconnected from humans and the human brain. Rather than thinking about AI-enabled systems as connected to humans, we think about them as parallel processes. We talk about human-in-the-loop or human-on-the-loop largely in terms of control over autonomous systems, rather than a comprehensive connection to and interaction with those systems. “Having the idea that a human always has to overrule or an algorithm always has to overrule is not the right strategy. It really has to be focusing on what the human is good at and what the algorithm is good at, and combining those two things together. And that will actually make decision-making better, fairer, and more transparent.”

5. “Garbage In, Garbage Out,” by Clare Garvie, Georgetown Law Center on Privacy & Technology, 16 May 2019.

A system relies on the information it is given. Feed it poor or questionable data and you will get poor or questionable results, hence the phrase, “garbage in, garbage out.” With facial recognition software becoming an increasingly common tool, law enforcement agencies are relying more heavily on a process that many experts admit is more art than science. Further, they’re stretching the bounds of the software when trying to identify potential suspects – feeding the system celebrity photos, composite sketches, and altered images in an attempt to “help” the system find the right person. However, studies by the National Institute of Standards and Technology (NIST) and Michigan State University concluded that composite sketches produced a positive match only between 4.1 and 6.7 percent of the time. Despite these dubious figures, some agencies are still following this process. As the Army becomes a more data-centric organization, it will be imperative to understand that “data” itself does not necessarily mean “good data.” If the Army feeds poor data into the system, it will get poor results that may lead to unnecessary resource expenditure or even loss of life. Modernization will rely on accurate forecasting underpinned by a robust data set. How can the Army ensure it has the right data it needs? What processes need to be put in place now to avoid potentially disastrous shortcuts?

6. “Deepfakes, social media, and the 2020 election,” by John Villasenor, Brookings TechTank, 3 June 2019.

“…deepfakes are the inevitable next step in attacking the truth.”

The point of deepfakes is not necessarily to convince people that public figures said something that they did not. They’re designed to introduce doubt and confusion into people’s minds, wreaking havoc on the information-saturated environment Americans are accustomed to operating in. As this type of digital deception becomes more refined, social media platforms will face greater technological and logistical challenges in identifying and removing false information, not to mention walking the fine legal line of subjective content monitoring.

Deepfakes are also of concern outside of the social media arena: our strategic competitors can use this tool to manipulate information, delegitimizing or mischaracterizing American military actions and operations in the views of local actors in conflict zones and the wider global population. In addition to the weaponization of information by state actors, any individual with basic technological skill and access to a computer and the internet can create a video that drastically alters the perceptions of millions. So, that leads us to wonder: what happens when we can no longer trust the information right in front of our eyes? How can we make decisions when we have to question all of our evidence?

7. “Space Exploration and the Age of the Anthropocosmos,” by Joi Ito, Wired, 30 May 2019.

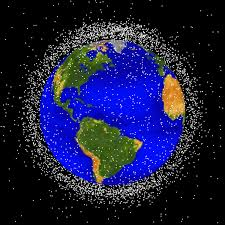

Space is the final frontier – and it’s here. Joi Ito likens the current utopian, free-for-all stage of human/space interactions to the initial years of the publicly accessible internet. To encapsulate this era, Ito coins a new term, anthropocosmos, for this phase of human development in which people have a measurable impact on non-terrestrial environments. However, he cautions that if expansion into and use of space is left unchecked, then a “tragedy of the commons” situation will begin to arise. Moriba Jah alluded to the tragedy of the commons regarding orbital “space debris” congestion by suggesting that this phenomenon is already occurring in Earth’s orbital pathways. Ito continues his comparison by highlighting that, much like the internet, space in the future will be used for all sorts of purposes unimaginable to us today. He envisions a world where space becomes increasingly commercialized, monitored, and restricted by various actors trying to secure their own domains (such as governments) or turn a profit. Space can be a cooperative arena, but if it’s not, people on Earth and beyond the planet will feel the negative consequences of exploiting this newly accessible environment.

8. “Team of Teams: New Rules of Engagement for a Complex World,” by General Stanley McChrystal, Tantum Collins, David Silverman, and Chris Fussell, Penguin Random House, 12 May 2015.

This 2015 book on leadership, engagement, and teamwork, primarily authored by retired General and JSOC Commander Stan McChrystal, addresses the tension between how military teams (and teams in the workforce in general) are traditionally organized and led and the info/data-centric character of modern warfare in the emerging digital age.

The book highlights the need for conventional and special forces to transform their centralized and largely rigid ways of warfare into something more adaptive, fluid, and agile to counter the growing insurgency in Iraq in 2004. As forces in Iraq tackled a boiling sectarian civil war in hot spots like Fallujah, McChrystal’s Joint Special Operations Command morphed into a team of teams that efficiently leveraged intelligence experts, informant networks, interagency task forces, special operators, and a host of support personnel to kill, capture, and disrupt what had become al Qaeda in Iraq (AQI). This network, built to defeat a network, saw its efforts and metamorphosis culminate in the June 2006 airstrike on AQI leader and most wanted man in Iraq, Abu Musab al-Zarqawi.

The lessons gleaned from this book are applicable not only to special operations forces involved in manhunts or even to military operations as a whole, but to teams across the globe in all areas of business, academia, and service. The rapid pace of changing circumstances, enormity of big data, and pervasiveness of hyper-connectivity mean that organizations must shift away from being executive-centric, hierarchical, and rigid and become cross-functional, openly communicating, and mutually respecting teams of teams. The growing integration of artificial intelligence and machine learning in the workplace (that will likely include levels of, or assistance to, decision-making) will heighten the need for cross-collaboration and a better top-to-bottom understanding of how the team of teams functions as a whole.

If you read, watch, or listen to something this month that you think has the potential to inform or challenge our understanding of the Future OE, please forward it (along with a brief description of why its potential ramifications are noteworthy to the greater Mad Scientist Community of Action) to our attention at: usarmy.jble.tradoc.mbx.army-mad-scientist@mail.mil — we may select it for inclusion in our next edition of “The Queue”!