[Editor’s Note: The Information Environment (IE) is the point of departure for all events across the Multi-Domain Operations (MDO) spectrum. It’s a unique space that demands our understanding, as the Internet of Things (IoT) and hyper-connectivity have democratized accessibility, …

118. The Future of Learning: Personalized, Continuous, and Accelerated

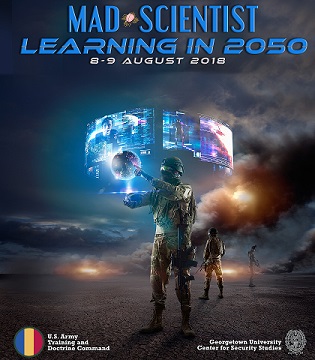

[Editor’s Note: At the Mad Scientist Learning in 2050 Conference with Georgetown University’s Center for Security Studies in Washington, DC, leading scientists, innovators, and scholars gathered to discuss how humans will receive, process, and integrate information in the future. The convergence of …

101. TRADOC 2028

[Editor’s Note: The U.S. Army Training and Doctrine Command (TRADOC) mission is to recruit, train, and educate the Army, driving constant improvement and change to ensure the Total Army can deter, fight, and win on any battlefield now and …

75. “The Queue”

[Editor’s Note: Mad Scientist Laboratory is pleased to present (somewhat belatedly) our July edition of “The Queue” – a monthly post listing the most compelling articles, books, podcasts, videos, and/or movies that the U.S. Army’s Training and Doctrine Command (TRADOC) …

74. Mad Scientist Learning in 2050 Conference

Mad Scientist Laboratory is pleased to announce that Headquarters, U.S. Army Training and Doctrine Command (TRADOC) is co-sponsoring the Mad Scientist Learning in 2050 Conference with Georgetown University’s Center for Security Studies this week (Wednesday and Thursday, 8-9 August 2018) …